Similar todos

Calculate token count and save the GPT model used for each message so I can better track costs and usage #marcbot

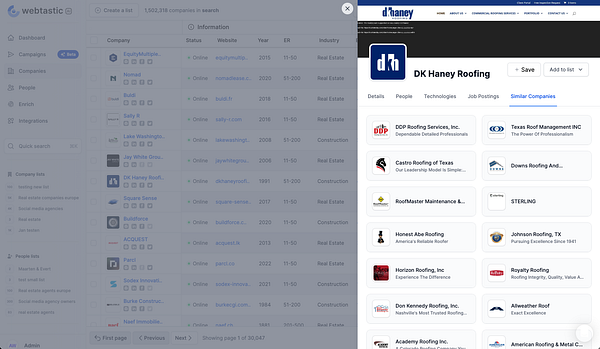

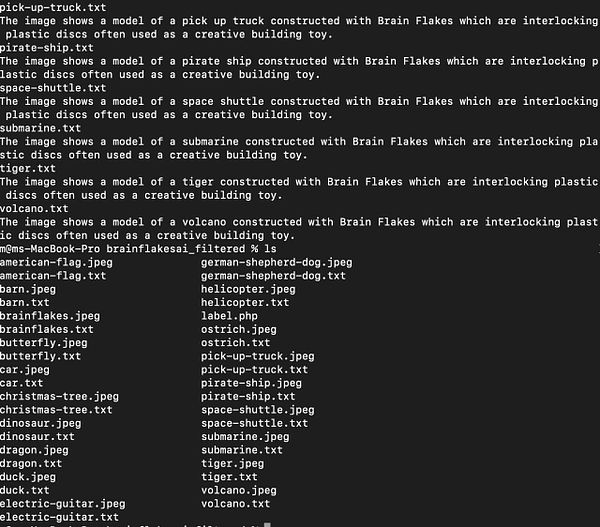
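A minimal sketch of per-message usage tracking, assuming each message is saved together with the model name and a token count. `UsageLog`, `MessageRecord` and `approx_token_count` are hypothetical names, not part of any existing codebase. The default counter only splits on whitespace as a placeholder, so it will not match real GPT token counts (a tokenizer such as OpenAI's `tiktoken` would be needed for that). Prices are supplied by the caller rather than assumed.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def approx_token_count(text: str) -> int:
    # Placeholder counter: whitespace-separated words, NOT real GPT tokens.
    return len(text.split())


@dataclass
class MessageRecord:
    text: str
    model: str   # GPT model used for this message
    tokens: int  # token count computed when the message was saved


@dataclass
class UsageLog:
    count_tokens: Callable[[str], int] = approx_token_count
    records: List[MessageRecord] = field(default_factory=list)

    def save(self, text: str, model: str) -> MessageRecord:
        # Store the message along with its model and token count.
        record = MessageRecord(text=text, model=model, tokens=self.count_tokens(text))
        self.records.append(record)
        return record

    def tokens_by_model(self) -> Dict[str, int]:
        # Sum token counts per model name.
        totals: Dict[str, int] = defaultdict(int)
        for r in self.records:
            totals[r.model] += r.tokens
        return dict(totals)

    def estimated_cost(self, price_per_1k: Dict[str, float]) -> float:
        # price_per_1k maps model name -> caller-supplied price per 1,000 tokens.
        return sum(
            tokens / 1000 * price_per_1k[model]
            for model, tokens in self.tokens_by_model().items()
        )
```

Keeping the counter injectable (e.g. `UsageLog(count_tokens=my_tokenizer)`) lets a real tokenizer replace the placeholder without touching the storage or aggregation logic.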

More testing: embeddings + filters seem to work very well #sparkbase

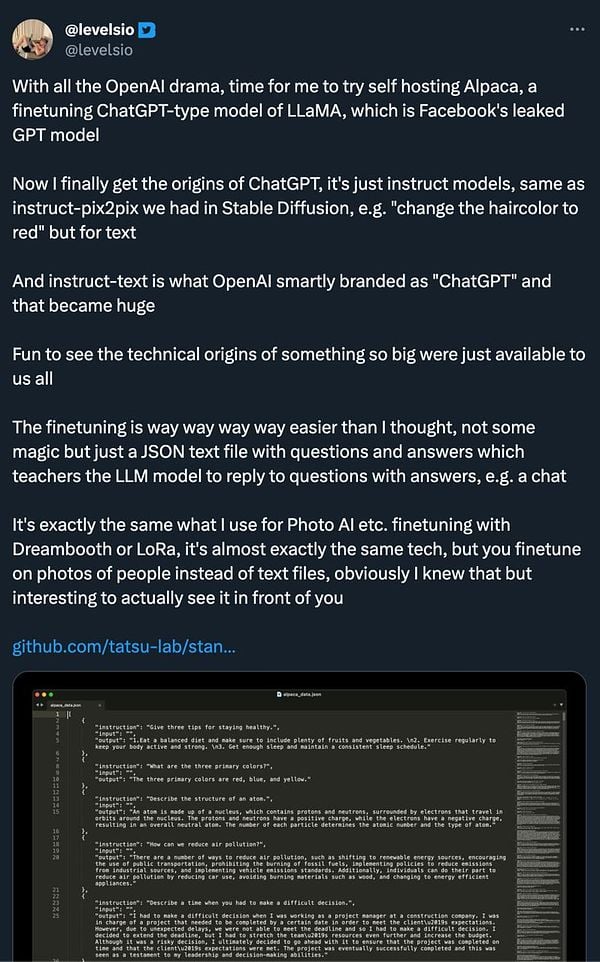
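How sparkbase combines embeddings and filters isn't described here. One common pattern is to pre-filter documents on exact-match metadata and then rank the survivors by cosine similarity to the query embedding; below is a minimal sketch of that pattern, assuming each document is a dict with hypothetical `id`, `embedding` and `metadata` keys.

```python
import math
from typing import Any, Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    # Dot product divided by the product of vector norms; 0.0 for zero vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def filtered_search(
    query_vec: Sequence[float],
    docs: List[Dict[str, Any]],
    filters: Dict[str, Any],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    # 1. Keep only documents whose metadata matches every filter exactly.
    candidates = [
        d for d in docs
        if all(d["metadata"].get(k) == v for k, v in filters.items())
    ]
    # 2. Score the remaining documents by similarity to the query.
    scored = [(d["id"], cosine_similarity(query_vec, d["embedding"])) for d in candidates]
    # 3. Return the top_k most similar (id, score) pairs.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

Filtering first keeps the similarity step cheap and guarantees every result satisfies the filters; vector databases often offer this as a built-in pre-filter option.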

talk about LLM fine-tuning, and about Alpaca and ChatGPT being just instruct-tuned text models #life fxtwitter.com/levelsio/status…

build math-based seq2seq #s2c

write a blog post/email about my experiment with embeddings #blog2

Complete survey analysis with ChatGPT code explorer x.com/julian_rubisch/status/1… #useattractor

GPT research and learning about ways to structure extra context

run more tests using an AI Hugging Face model over Substack newsletters to identify keywords and topics #sponsorgap

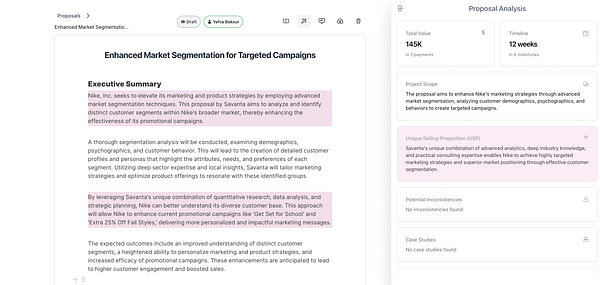

Improve AI proposal analyzer #pitchpower

use ChatGPT to write a script for calculating/storing embeddings for my blog #aiplay

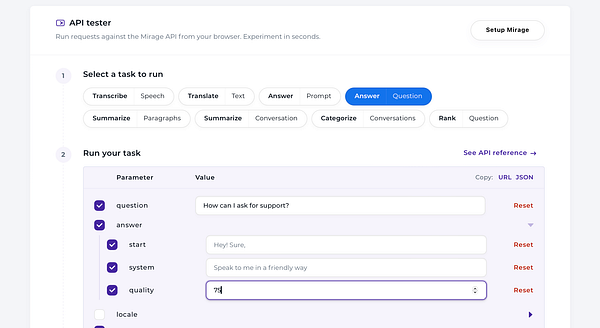

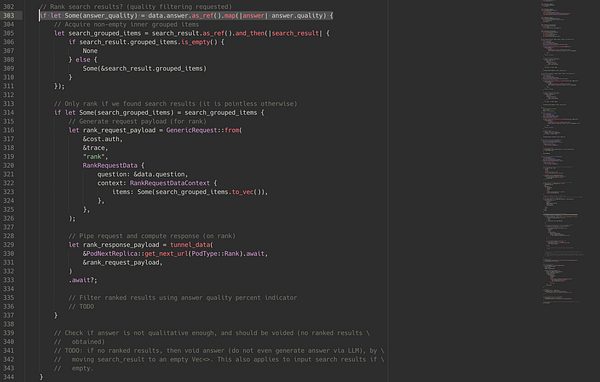
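The blog's actual setup isn't shown, so here is a minimal sketch of a calculate-and-store embeddings script, assuming posts are Markdown files in one directory and embeddings are kept in a JSON file. `update_embeddings` is a hypothetical name, and `embed` stands in for whatever embedding API is used; a SHA-256 hash of each post lets unchanged posts skip re-embedding. Deleted posts are not pruned from the store in this sketch.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable, Dict, List


def update_embeddings(
    posts_dir: Path,
    store_path: Path,
    embed: Callable[[str], List[float]],
) -> Dict[str, dict]:
    # Load previously stored embeddings, if the store file exists.
    store: Dict[str, dict] = {}
    if store_path.exists():
        store = json.loads(store_path.read_text(encoding="utf-8"))

    for post in sorted(posts_dir.glob("*.md")):
        text = post.read_text(encoding="utf-8")
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        entry = store.get(post.name)
        if entry and entry["sha256"] == digest:
            continue  # unchanged post: keep the stored embedding
        # New or edited post: (re)compute and record its embedding.
        store[post.name] = {"sha256": digest, "embedding": embed(text)}

    store_path.write_text(json.dumps(store), encoding="utf-8")
    return store
```

Hashing the content rather than checking modification times means only posts whose text actually changed trigger a new embedding call.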

add a quality filter to AI-generated content on #crisp bot

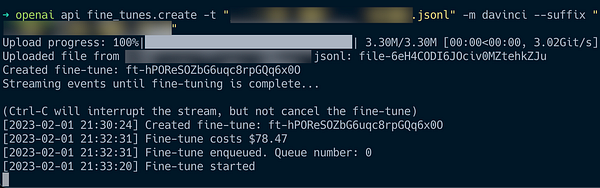

Experiment with fine-tuning GPT-3 for some programmatic SEO ideas #startupjobs

Prompt ChatGPT with custom embeddings #life

work on #crisp AI feature improvements

add LinkedIn embeddings to the matching algo #aiplay

Adding document analysis support for BoltAI #boltai

generate skill dimensions for testing/evaluating language acquisition #lengua
