Similar todos

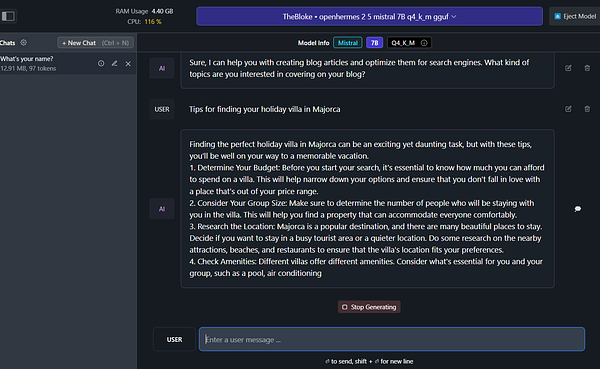

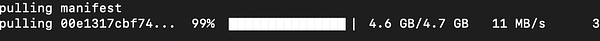

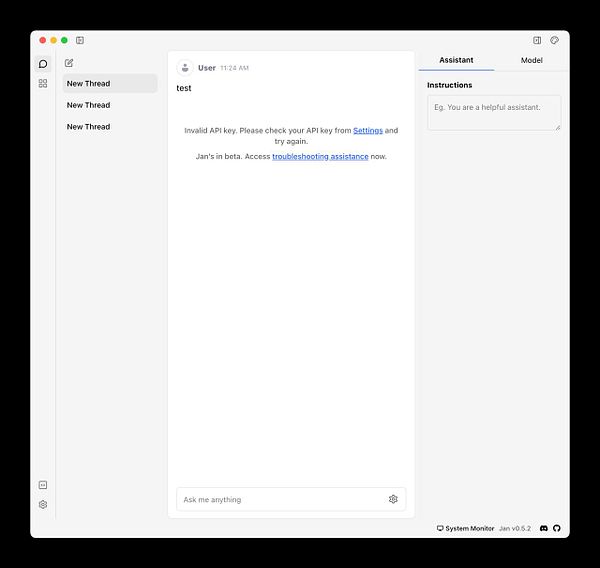

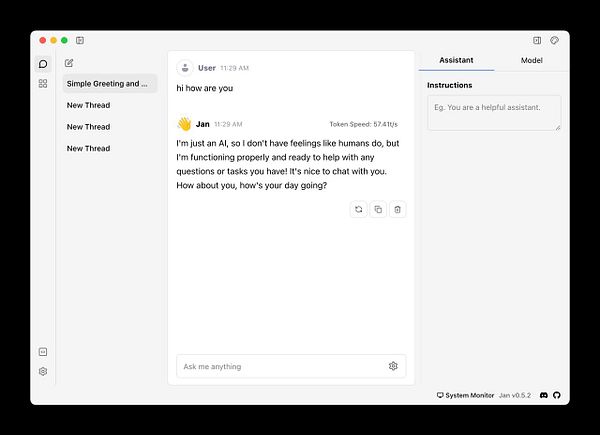

Playing with llama2 locally and running it for the first time on my machine

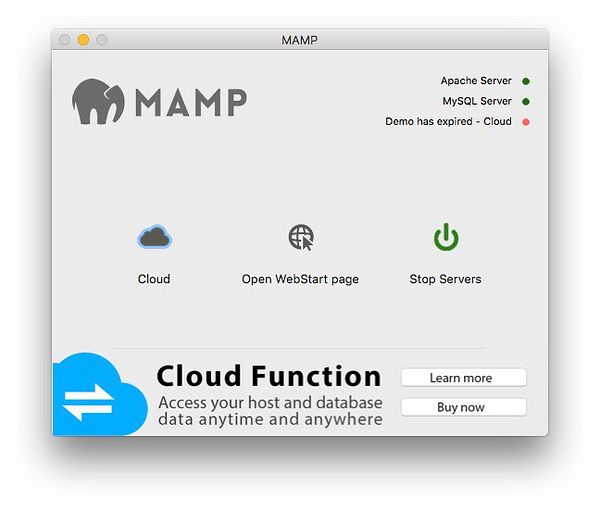

Starting up local server #olisto

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

Get Stable Diffusion (open-source DALL-E clone) up and running on my Mac #life

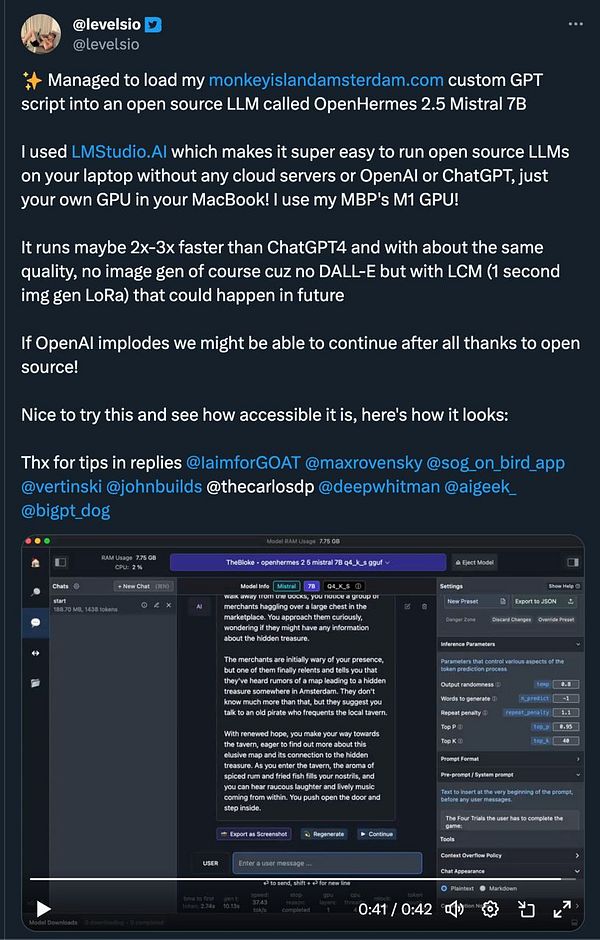

try loading #monkeyisland in my own local LLM

try client-side, web-based Llama 3 in JS #life webllm.mlc.ai/

Ollama is worth using if you have an M1/M2 Mac and want a speedy way to access the various llama2 models.

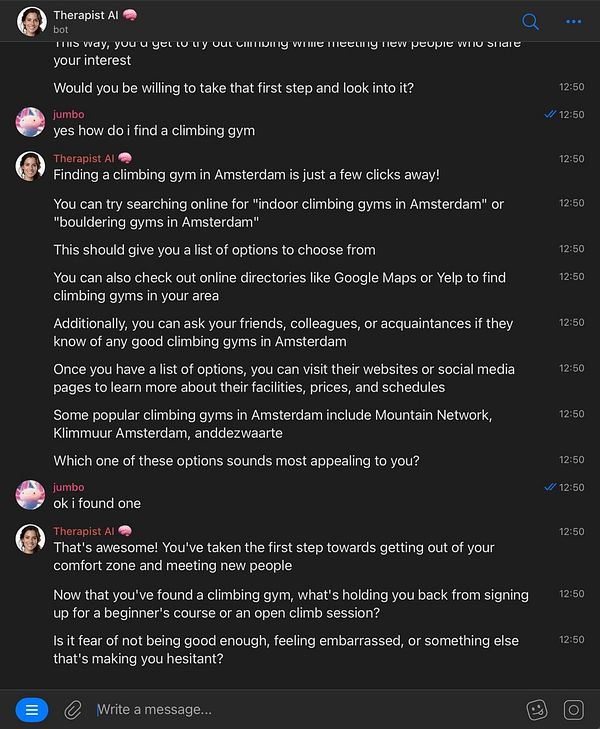

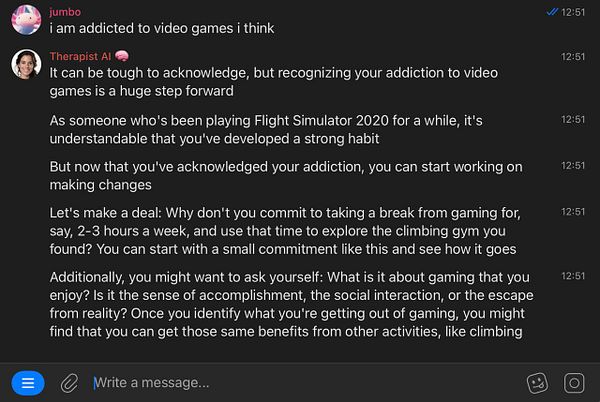

deploy Llama3-70B on #therapistai for me

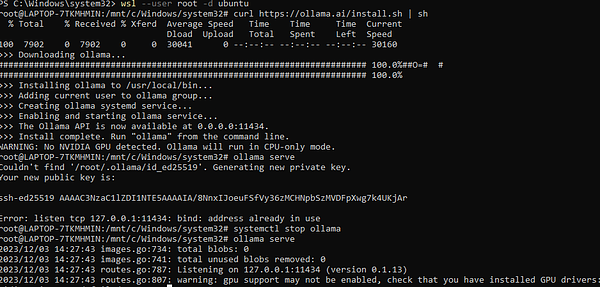

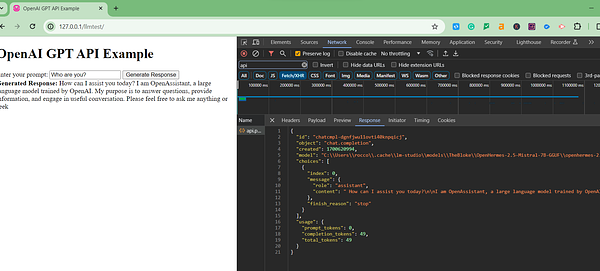

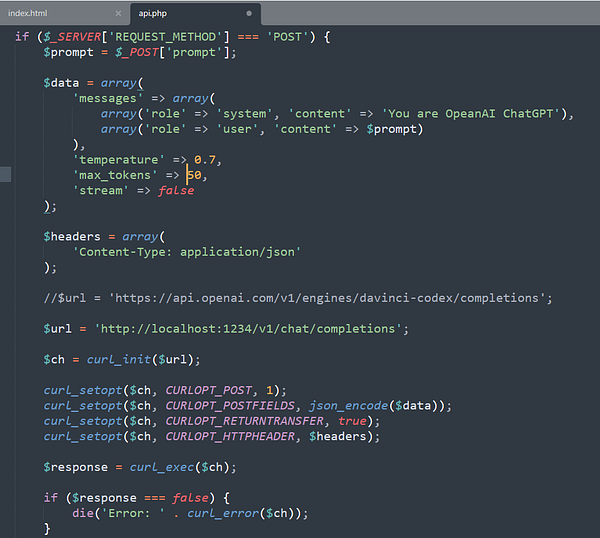

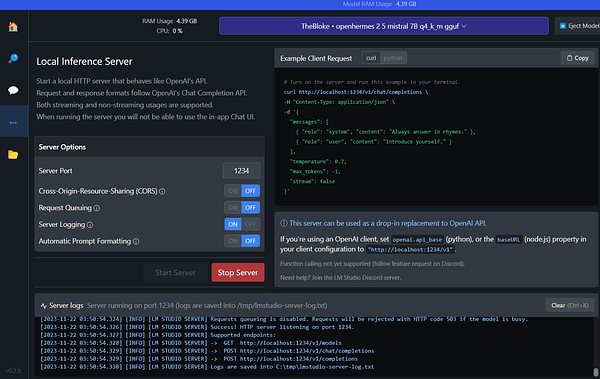

Ran some local LLM tests 🤖

🤖 got llama-cpp running locally 🐍

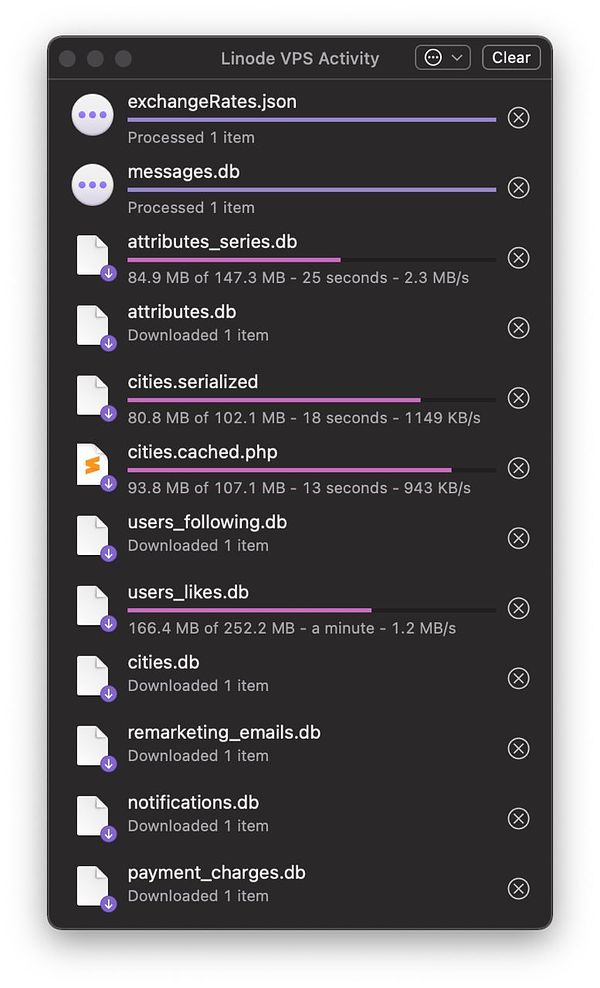

setting up local server for localization #apimocka
