Similar todos

Run openllm dollyv2 on local linux server

read up on llm embedding to start building something new with ollama

Playing with llama2 locally and running it for the first time on my machine

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

Starting up local server #olisto

🤖 Tried out Llama 3.3 and the latest Ollama client for what feels like flawless local tool calling.  #research

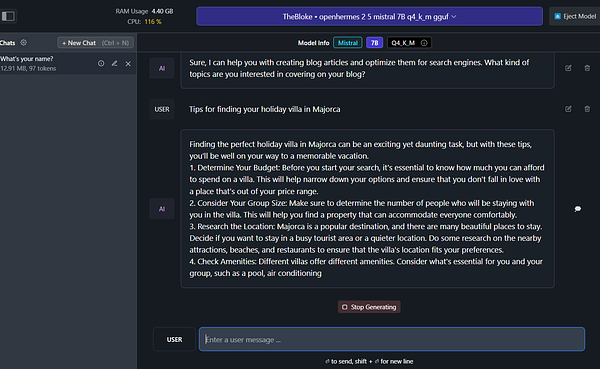

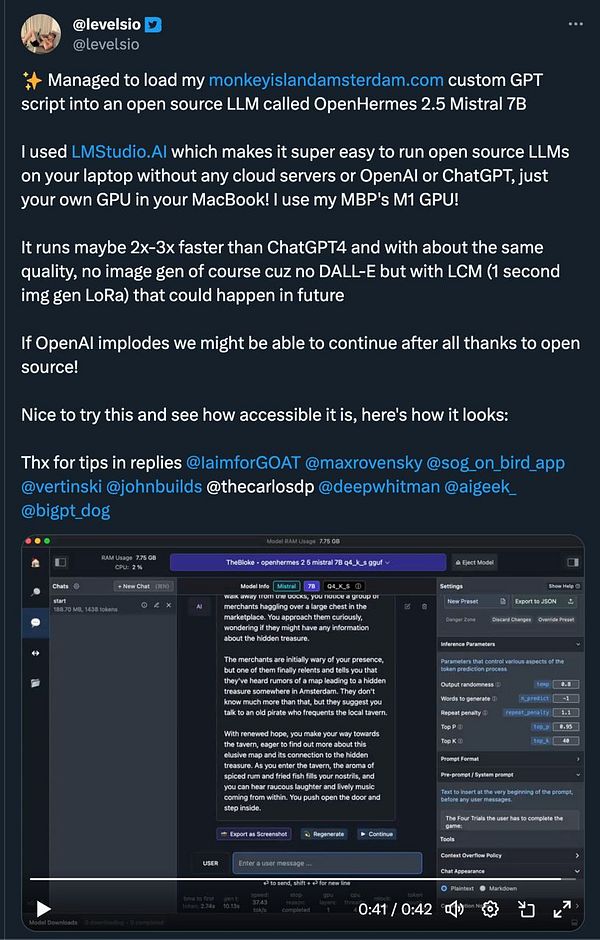

Try to load #monkeyisland in my own local LLM

implemented ollama local models  #rabbitholes

More Ollama experimenting  #research

Just finished and published a web interface for Ollama  #ollamachat

prototype a simple autocomplete using local llama2 via Ollama  #aiplay

Set up new local WordPress install #olisto

Set up new local WP install #olisto

✏️ I wrote and published my notes on using the Ollama service micro.webology.dev/2024/06/11…

Installed Cody in Cursor so that I can use llama3.1 and gemma2 via Ollama  #astronote

#astronote  #leifinlavida

got llamacode working locally and it's really good

Ran some local LLM tests 🤖