Similar todos

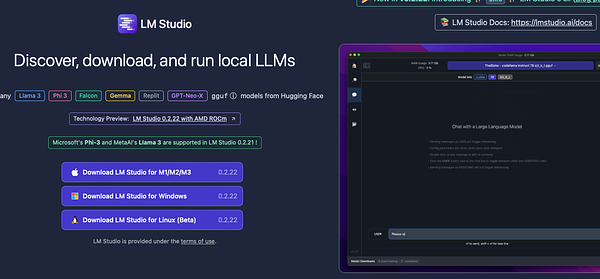

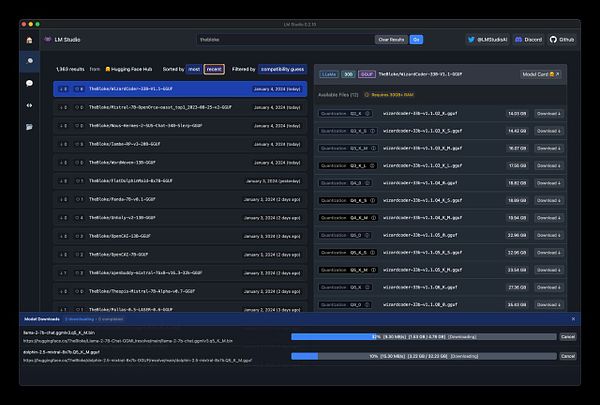

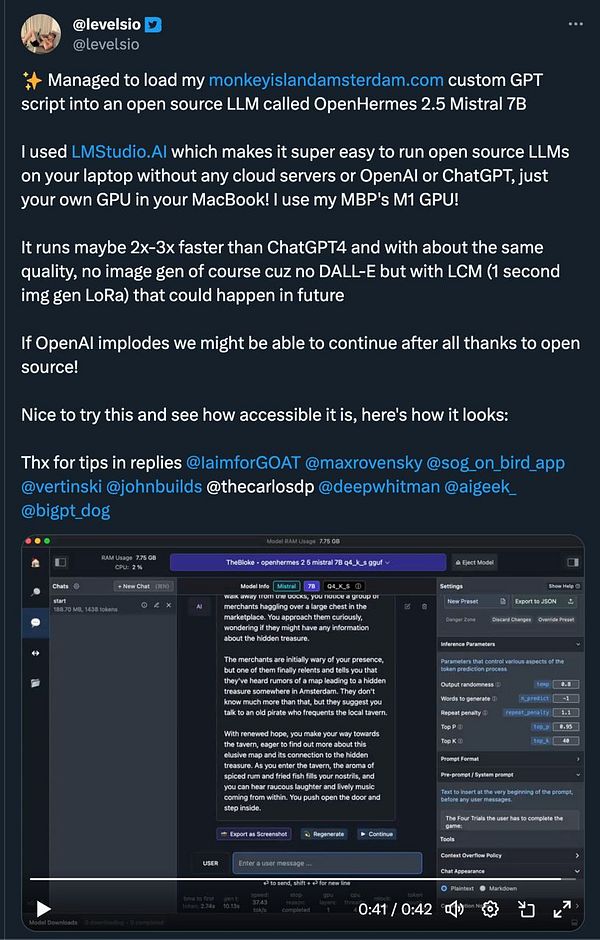

test OpenHermes 2.5 Mistral LLM

Run openllm dollyv2 on a local Linux server

try loading #monkeyisland into my own local LLM

Playing with llama2 locally and running it for the first time on my machine

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

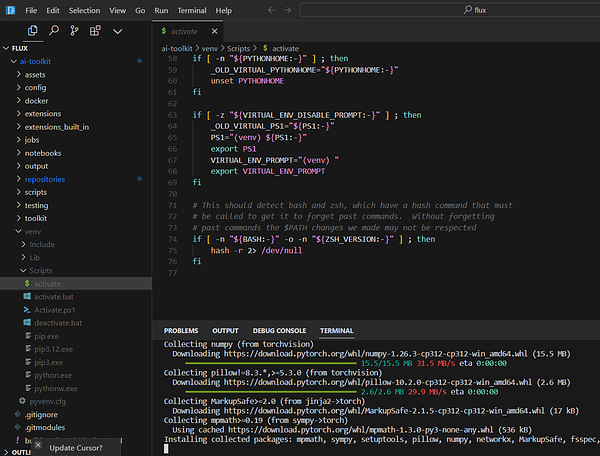

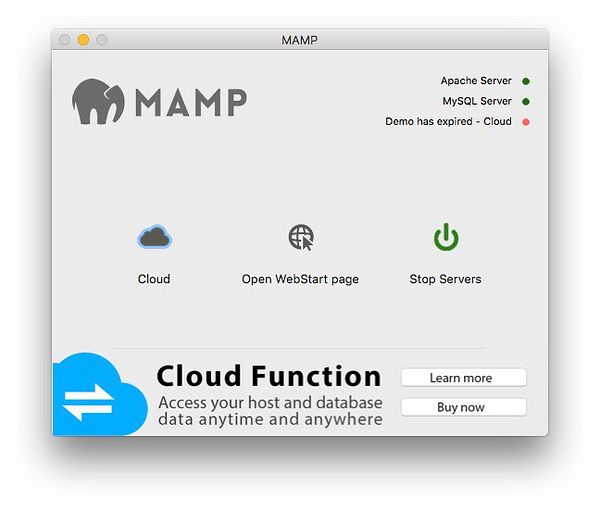

work on setting up the system locally #labs

Starting up local server #olisto

📝 prototyped an llm-ollama plugin tonight. models list and it talks to the right places. prompts need more work.

local project setup #keyframes

set up project locally #lite

Ran some local LLM tests 🤖

Trying NotebookLM

try client-side, web-based Llama 3 in JS #life webllm.mlc.ai/

install Laravel project and set it up

set up new L5 project (maybe Spark)

read up on LLM embeddings to start building something new with ollama