
Similar todos

check out Llama 3.1  #life

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…
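
For reference, here is a minimal sketch of querying a locally running Llama 3.1 through Ollama's HTTP API. It assumes Ollama is serving on its default port 11434 and that the model has been pulled under the tag `llama3.1`. The helper names are hypothetical. Only the `/api/generate` endpoint and its `model`, `prompt`, and `stream` fields come from Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3.1") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint (hypothetical helper)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.1") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama returns a single JSON object whose "response" field holds the text.
    return body["response"]


# Usage (requires a running Ollama server with the model pulled):
#   print(generate("Why is the sky blue? Answer in one sentence."))
```

Everything stays on the machine. The request never leaves `localhost`, which is the main appeal of running the model this way.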

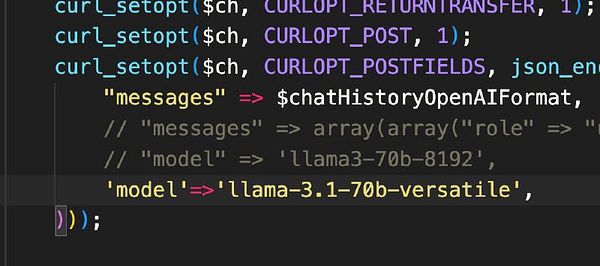

switch #therapistai to Llama 3.1

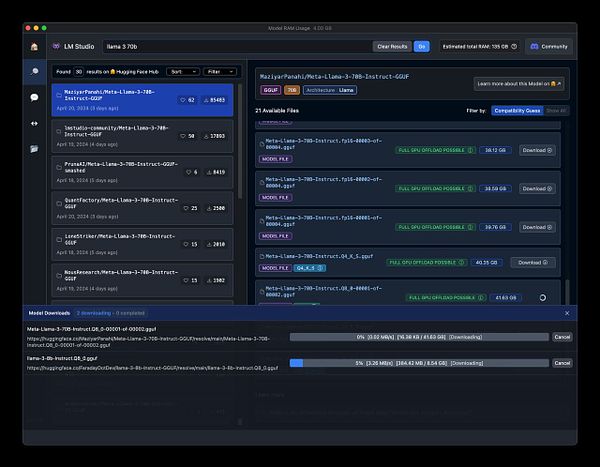

Over the past three and a half days, I've dedicated all my time to developing a new product that uses a locally running Llama 3.1 for real-time AI responses.

It's now available for Macs with M-series chips: completely free, local, and incredibly fast.

Get it: Snapbox.app  #snapbox

Playing with Llama 2 locally and running it for the first time on my machine

buy Mac mini M2 for video production #fajarsiddiq

Read up on MLX for Apple Silicon.

installed Cody in Cursor so that I can use Llama 3.1 and Gemma 2 via Ollama #astronote

#leifinlavida

more fun with Llama 2 and figuring out how to better control it for stable, predictable output
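
One common way to make output more predictable is to turn down sampling randomness. The sketch below assumes the model is served through Ollama, as in other entries here; this entry itself doesn't say how it was run. The option names `temperature`, `seed`, `num_predict`, and `stop` are real Ollama generation options. The helper `stable_payload` and its default values are hypothetical and only illustrative.

```python
from typing import List, Optional


def stable_payload(
    prompt: str,
    model: str = "llama2",
    seed: int = 42,
    max_tokens: int = 128,
    stop: Optional[List[str]] = None,
) -> dict:
    """Hypothetical helper: an Ollama /api/generate payload tuned for repeatable output."""
    options = {
        "temperature": 0,           # always prefer the most likely token
        "seed": seed,               # fixed seed so any remaining sampling is reproducible
        "num_predict": max_tokens,  # cap the length of the reply
    }
    if stop:
        options["stop"] = stop      # end generation when one of these strings appears
    return {"model": model, "prompt": prompt, "stream": False, "options": options}
```

In practice this keeps runs largely consistent for the same prompt, though results may still shift across model versions or hardware.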

try client-side, web-based Llama 3 in JS #life webllm.mlc.ai/

Run OpenLLM with Dolly v2 on a local Linux server

got Code Llama working locally and it's really good

probably gonna order a 14-inch MacBook Pro M2

prototype a simple autocomplete using a local Llama 2 via Ollama #aiplay
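
A minimal sketch of how such an autocomplete prototype might look, assuming Ollama's `/api/generate` endpoint with streaming enabled. `raw` (skip the prompt template), `stream`, and the `num_predict`, `temperature`, and `stop` options are Ollama parameters. The helper names and the specific option values are hypothetical. With streaming, Ollama sends newline-delimited JSON objects, each carrying a `response` chunk, until one arrives with `done: true`.

```python
import json
from typing import Iterable


def autocomplete_payload(prefix: str, model: str = "llama2") -> dict:
    """Hypothetical helper: ask the model for a short continuation of `prefix`."""
    return {
        "model": model,
        "prompt": prefix,
        "raw": True,     # no chat template: the model simply continues the text
        "stream": True,  # receive tokens as they are produced
        "options": {"num_predict": 16, "temperature": 0.2, "stop": ["\n"]},
    }


def collect_completion(lines: Iterable[bytes]) -> str:
    """Join the "response" chunks from Ollama's newline-delimited JSON stream."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # ignore blank keep-alive lines
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)
```

Because iterating the response object returned by `urllib.request.urlopen` yields lines, it can be passed straight to `collect_completion`. Short `num_predict` values and a newline stop keep suggestions fast and single-line, which suits an autocomplete.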

Got Stable Diffusion XL working on an M1 MacBook Pro.

format MacBook #life
