Similar todos

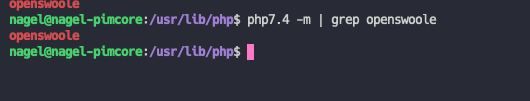

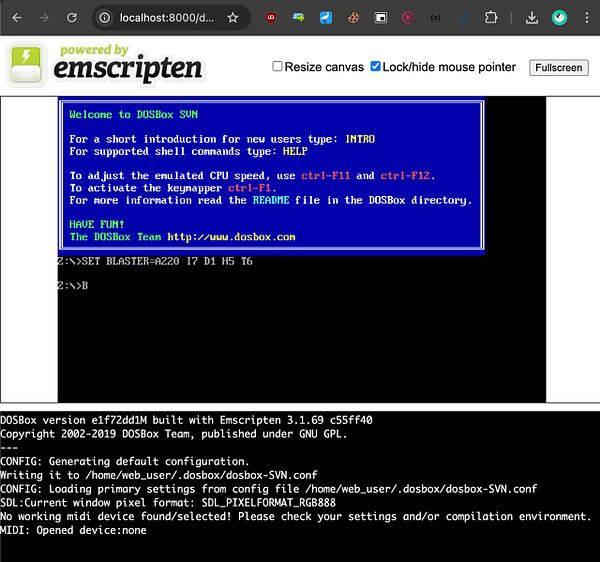

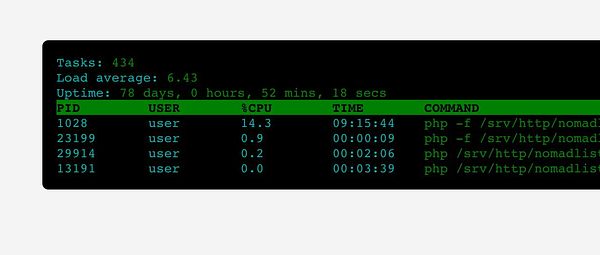

set up linux VM for testing

Got whatdo and lets-play running locally

setup local SSL environment #pocketpager

setup local #chocolab dev environment

Shipped BoltAI v1.13.6, use AI Command with local LLMs via Ollama 🥳  #boltai

Run Telegram client in cloud  #telepost

got llamacode working locally and it's really good

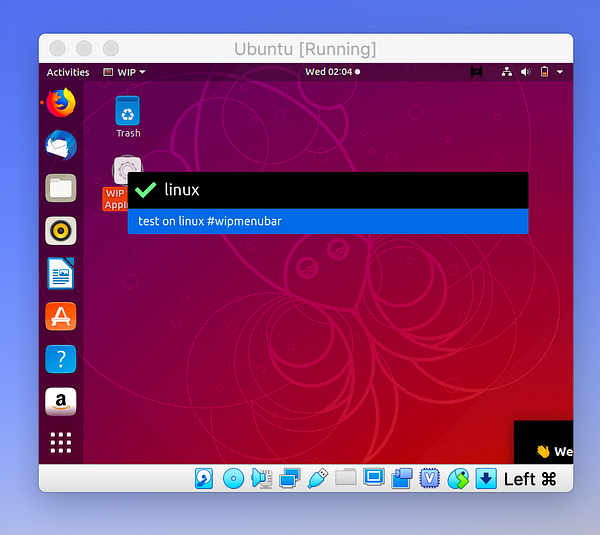

test on linux  #menubar

Over the past three and a half days, I've dedicated all my time to developing a new product that uses a locally running Llama 3.1 for real-time AI responses.

It's now available on Macs with M series chips – completely free, local, and incredibly fast.

Get it: Snapbox.app  #snapbox

setup local dev env  #sanderfish

setup local dev env  #chime

setup linode server for client  #freelancegig