Similar todos

🤖 Tried out Llama 3.3 and the latest Ollama client for what feels like flawless local tool calling.  #research

🤖 got llama-cpp running locally 🐍

Playing with llama2 locally and running it for the first time on my machine

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

fun saturday: Set up local LLM coding assistant and local voice transcription on my M1, for use when wifi is unavailable

livecode getting a HAI WORLD lolcode compiler working

played with symbex and llm to generate code. super cool and immediately useful

prototype a simple autocomplete using local llama2 via Ollama  #aiplay

check out Llama 3.1  #life

livecode trying out NextJS  #codewithswiz

Ran some local LLM tests 🤖

livecode finishing the lovebox build  #codewithswiz

livecode more webrtc stuff

Over the past three and a half days, I've dedicated all my time to developing a new product that leverages locally running Llama 3.1 for real-time AI responses.

It's now available on Macs with M series chips – completely free, local, and incredibly fast.

Get it: Snapbox.app  #snapbox

got llama3 on groq working with cursor 🤯

try client-side, web-based Llama 3 in JS  #life webllm.mlc.ai/

livecode more webrtc fun having

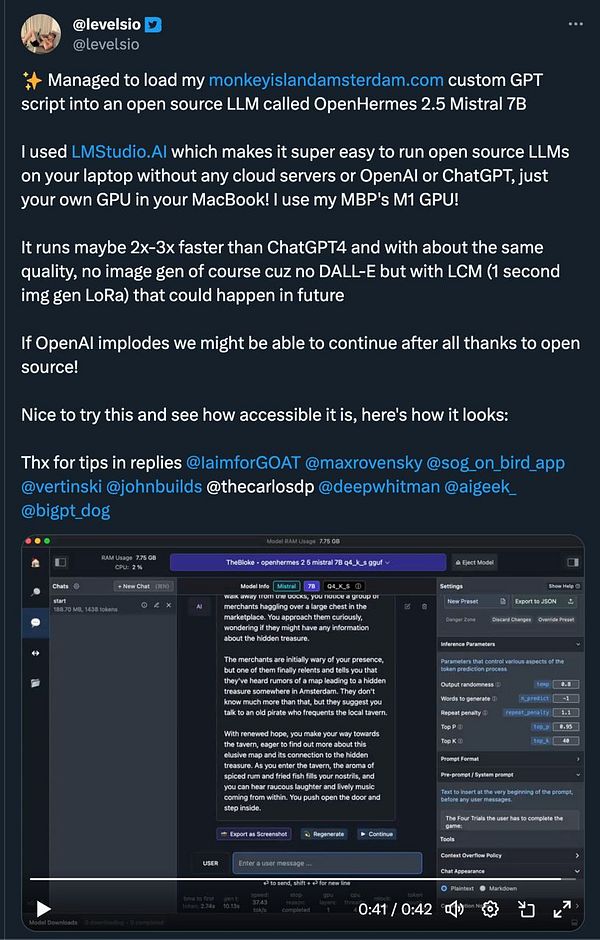

try loading  #monkeyisland in my own local LLM

livecode even more WebRTC stuff