Similar todos

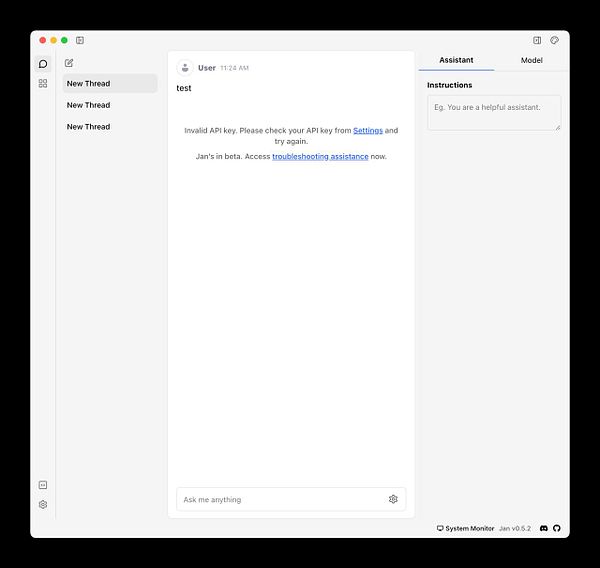

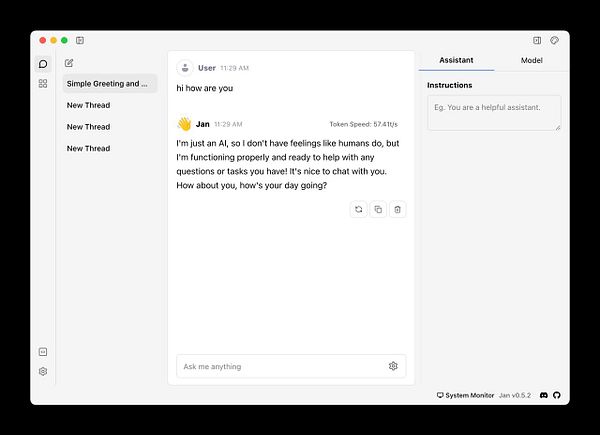

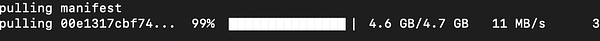

Ollama is worth using if you have an M1/M2 Mac and want a fast way to run the various Llama 2 models.

livecode even more WebRTC stuff

quick livecode; get useAuth almost working as a lib

livecode some basic gatsby setup #serverlesshandbook

livecode some research #learnwhileyoupoop

livecode some progress #threadcompiler

get a NoCode client #labs

livecode progress on my little CMS #codewithswiz

more fun with Llama 2 and figuring out how to better control and predict stable output

livecode messing around with Remix

livecode messing around with nextjs #codewithswiz
