Similar todos

implemented ollama local models  #rabbitholes

🤖 Tried out Llama 3.3 and the latest Ollama client for what feels like flawless local tool calling.  #research

📝 prototyped an llm-ollama plugin tonight. models list and it talks to the right places. prompts need more work.

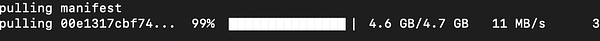

Playing with llama2 locally and running it for the first time on my machine

try client-side, web-based Llama 3 in JS  #life webllm.mlc.ai/

read up on LLM embeddings to start building something new with Ollama
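
A minimal sketch of where embeddings fit. It assumes a local Ollama server on its default port (11434) and an embedding model such as `nomic-embed-text` that has already been pulled. The model name and the `rank` helper are illustrative and do not come from the todo. The code calls Ollama's `/api/embed` endpoint and ranks documents by cosine similarity to a query.

```python
import json
import math
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default local Ollama port


def embed(texts, model="nomic-embed-text"):
    """Return one embedding per input text via Ollama's /api/embed endpoint.

    Requires a running Ollama server with the (assumed) model already pulled.
    """
    body = json.dumps({"model": model, "input": texts}).encode()
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["embeddings"]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query, docs, model="nomic-embed-text"):
    """Hypothetical helper: return (score, doc) pairs, most similar first."""
    vectors = embed([query] + docs, model=model)
    q, rest = vectors[0], vectors[1:]
    scored = [(cosine(q, v), d) for d, v in zip(docs, rest)]
    return sorted(scored, reverse=True)
```

The query and the documents go in a single batched request, so the vectors are directly comparable.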

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

installed Cody in Cursor so that I can use llama3.1 and gemma2 via Ollama  #astronote #leifinlavida

🤖 played with Aider and got it mostly working with Ollama + Llama 3.1  #research

check out Llama 3.1  #life

got llamacode working locally and it's really good

🤖 played around with adding extra context to some local Ollama models. Trying to test some real-world tasks I'm tired of doing.  #research

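
Adding extra context usually means placing reference text in the prompt and making sure the model's context window can hold it. Below is a sketch against Ollama's `/api/chat` endpoint, assuming a local server. `num_ctx` is a real Ollama option, but the 8192 value and the helper names are illustrative assumptions.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default local install


def build_chat_request(model, context, question, num_ctx=8192):
    """Build an /api/chat payload that puts extra context in the system message.

    num_ctx enlarges the context window so long context is not truncated;
    8192 is an illustrative value, not a recommendation.
    """
    return {
        "model": model,
        "stream": False,
        "options": {"num_ctx": num_ctx},
        "messages": [
            {"role": "system", "content": f"Use this context to answer:\n\n{context}"},
            {"role": "user", "content": question},
        ],
    }


def send(payload):
    """POST a payload to a running Ollama server and return the reply text."""
    req = urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]
```

Keeping the context in the system message separates it from the actual task. That makes it easy to swap in different documents while reusing the same question.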

🤖 played with Ollama's tool calling with Llama 3.2 to create a calendar management agent demo  #research

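
A sketch of the tool-calling step in a calendar demo, assuming Ollama's `/api/chat` endpoint with a `tools` list. The `add_event` tool, its fields, and the in-memory calendar are hypothetical. The model proposes calls in `message.tool_calls`, and the script runs the matching Python function for each one.

```python
import json

# Hypothetical calendar tool; the name and fields are illustrative only.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "add_event",
        "description": "Add an event to the calendar",
        "parameters": {
            "type": "object",
            "properties": {
                "title": {"type": "string"},
                "date": {"type": "string", "description": "YYYY-MM-DD"},
            },
            "required": ["title", "date"],
        },
    },
}]

CALENDAR = []  # in-memory stand-in for a real calendar


def add_event(title, date):
    CALENDAR.append({"title": title, "date": date})
    return f"Added {title} on {date}"


HANDLERS = {"add_event": add_event}


def build_request(prompt, model="llama3.2"):
    """/api/chat payload that offers the tools to the model."""
    return {
        "model": model,
        "stream": False,
        "tools": TOOLS,
        "messages": [{"role": "user", "content": prompt}],
    }


def run_tool_calls(response):
    """Execute each tool call in a decoded /api/chat response and collect results.

    Ollama returns arguments as a JSON object; decode defensively in case
    a string slips through.
    """
    results = []
    for call in response.get("message", {}).get("tool_calls", []):
        fn = call["function"]
        args = fn["arguments"]
        if isinstance(args, str):
            args = json.loads(args)
        results.append(HANDLERS[fn["name"]](**args))
    return results
```

To use it, POST `build_request(...)` to `/api/chat` and pass the decoded JSON reply to `run_tool_calls`. A full agent would append the results as `tool` messages and ask the model to continue.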

more fun with LLAMA2 and figuring out how to better control/predict stable output
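
One common way to make local output more repeatable is to pin sampling by setting `temperature` to 0 and a fixed `seed` in Ollama's `options`. Below is a sketch against `/api/generate`, assuming a local server and the `llama2` model tag. This narrows the variation but is not a hard guarantee of identical output across Ollama versions or hardware.

```python
import json
import urllib.request

GENERATE_URL = "http://localhost:11434/api/generate"  # assumption: default local server


def build_generate_request(prompt, model="llama2", seed=42):
    """Payload with sampling pinned for more repeatable output.

    temperature=0 makes decoding (close to) greedy; a fixed seed pins
    any remaining randomness. The seed value itself is arbitrary.
    """
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"temperature": 0, "seed": seed},
    }


def generate(prompt, **kwargs):
    """Send the request to a running Ollama server and return the text."""
    payload = json.dumps(build_generate_request(prompt, **kwargs)).encode()
    req = urllib.request.Request(
        GENERATE_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate` twice with the same prompt and seed should usually return the same text. That makes it easier to tell whether a change in output came from a prompt tweak or from sampling noise.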

🤖 Updated some scripts to use Ollama's latest structured output with Llama 3.3 (latest), then fell back to Llama 3.2. I went from over a minute per request with 3.3 down to 2 to 7 seconds per request with 3.2, and I can't see a difference in the results. For small projects, 3.2 is the better path.  #research

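
A sketch of the structured-output pattern with per-request timing. It assumes a local Ollama server recent enough to accept a JSON schema in the `format` field of `/api/chat`. The schema and helper names are hypothetical. Passing `llama3.3` or `llama3.2` as the `model` argument is one way to time the two models against each other on the same input.

```python
import json
import time
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default local server

# Hypothetical output schema; replace with whatever your script extracts.
SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "tags": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "tags"],
}


def build_request(text, model="llama3.2"):
    """/api/chat payload that constrains the reply to SCHEMA via `format`."""
    return {
        "model": model,
        "stream": False,
        "format": SCHEMA,
        "messages": [{"role": "user", "content": f"Extract a title and tags:\n{text}"}],
    }


def parse_reply(response):
    """Decode the JSON reply and check that the required keys are present."""
    data = json.loads(response["message"]["content"])
    missing = [key for key in SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"reply is missing keys: {missing}")
    return data


def timed_extract(text, model="llama3.2"):
    """Run one request against a live server; return (parsed data, elapsed seconds)."""
    body = json.dumps(build_request(text, model)).encode()
    req = urllib.request.Request(
        CHAT_URL, data=body, headers={"Content-Type": "application/json"}
    )
    start = time.perf_counter()
    with urllib.request.urlopen(req) as resp:
        response = json.loads(resp.read())
    return parse_reply(response), time.perf_counter() - start
```

The key check in `parse_reply` is a cheap safety net. A full JSON Schema validator would be stricter.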

🤖 More work with Ollama and Llama 3.1, building a story writer as a good-enough demo.  #research

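
A story writer reads better when the text appears as it is generated. Below is a sketch of consuming Ollama's streamed `/api/chat` reply, which arrives as newline-delimited JSON chunks that each carry a piece of `message.content`. The system prompt, model tag, and helper names are illustrative assumptions.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default local server


def build_story_request(premise, model="llama3.1"):
    """Streaming /api/chat payload with an illustrative system prompt."""
    return {
        "model": model,
        "stream": True,
        "messages": [
            {"role": "system", "content": "You are a concise short-story writer."},
            {"role": "user", "content": premise},
        ],
    }


def collect_stream(lines):
    """Join message chunks from newline-delimited JSON until a chunk has done=true."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def write_story(premise):
    """Stream a story from a running Ollama server and return the full text."""
    req = urllib.request.Request(
        CHAT_URL,
        data=json.dumps(build_story_request(premise)).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return collect_stream(resp)  # the response iterates line by line
```

Keeping the parsing in `collect_stream` separate from the HTTP call makes it easy to print each chunk as it arrives instead of only returning the joined text.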

🤖 Created an Ollama + Llama 3.2 version of my job parser to compare against ChatGPT. It's not bad at all, but not as good as GPT-4.  #jobs
