Similar todos

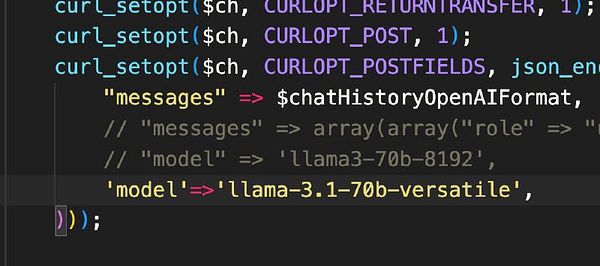

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

🤖 Tried out Llama 3.3 and the latest Ollama client for what feels like flawless local tool calling.  #research

check out Llama 3.1  #life

🤖 got llama-cpp running locally 🐍

try client-side, web-based Llama 3 in JS  #life webllm.mlc.ai/

fun Saturday: set up a local LLM coding assistant and local voice transcription on my M1 for use when Wi-Fi is unavailable

🤖 lots of AI research last night, including writing a functional story bot to wrap my head around how to apply step-by-step logic and get something meaningful out of llama2  #research

🤖 Updated some scripts to use Ollama's latest structured output with Llama 3.3 (latest), then fell back to Llama 3.2. Requests dropped from over a minute each with 3.3 to 2 to 7 seconds each with 3.2, and I can't see a difference in the results. For small projects, 3.2 is the better path.  #research

got llamacode working locally and it's really good

Playing with llama2 locally and running it for the first time on my machine

🤖 more work with Ollama and Llama 3.1, building a story writer as a good-enough demo.  #research

🤖 running ~600 videos through mlx-whisper (the only macOS Whisper library I could find that supports the GPU) and letting it run overnight.  #djangotv

🤖 played with Aider and got it mostly working with Ollama + Llama 3.1  #research

Read up on MLX for Apple Silicon.

switch #therapistai to Llama 3.1

🤖 got sweep running on my Mac Studio and spent $0.73 letting it write five pull requests to try it out. Not bad.