Similar todos

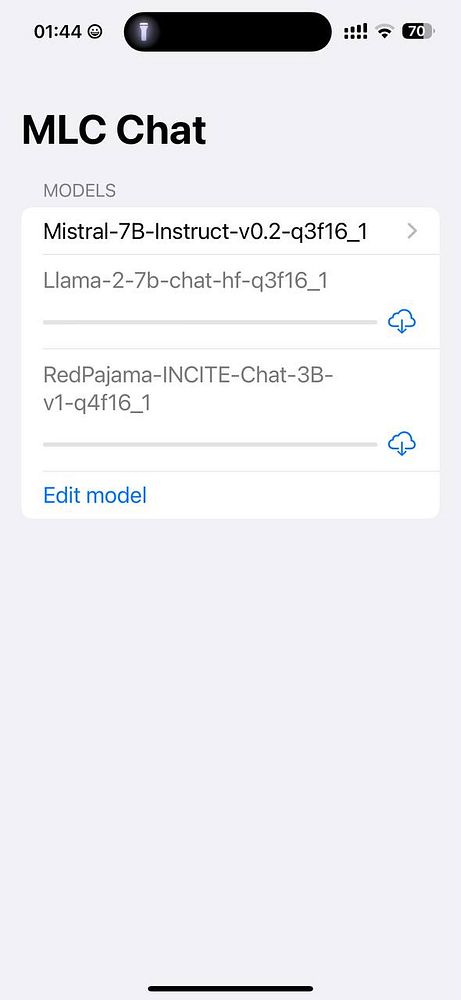

Playing with llama2 locally and running it for the first time on my machine

fun saturday: Set up local LLM coding assistant and local voice transcription on my M1, for use when wifi is unavailable

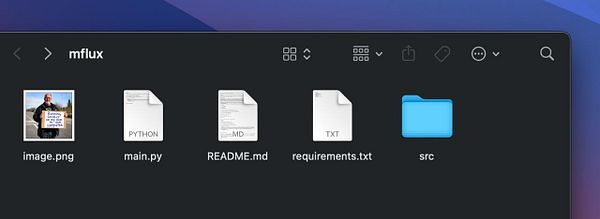

Get Flux running locally  #life

Installed and familiarized myself with the Local macOS app to work on a WP theme locally #rebuildinpublic

🤖 Tried out Llama 3.3 and the latest Ollama client for what feels like flawless local tool calling.  #research

Working on local first  #rabbitholes

setup local env #pressapps

setting up local server for localization  #apimocka

setup local testing via python-lambda-local

trying to repro a weird bug with the matching algo but ran into trouble running the app locally #aiplay

setup local  #chocolab dev environment

Get new client project up and running locally  #matterloop

learned that I need to run a local web server to start playing with the Supabase API on my website #ramsay

Trying NotebookLM

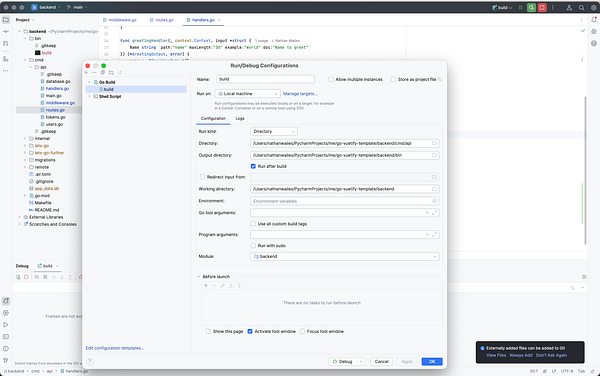

Got the GoLand debugger working with my web app backend. It was super easy: I just had to switch the configuration "Run kind" from Package to Directory and point it at /cmd/api/

got llamacode working locally and it's really good

🤖 played with Ollama's tool calling with Llama 3.2 to create a calendar management agent demo  #research
