Similar todos

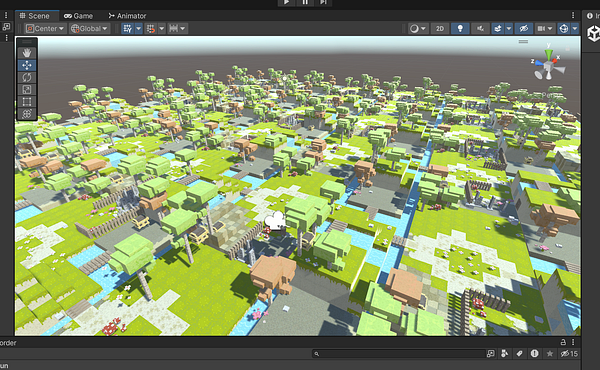

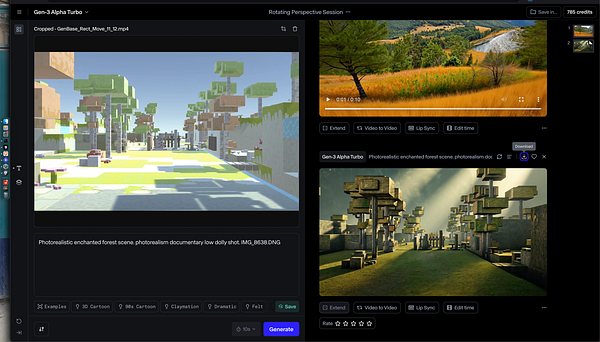

try more methods for generating worlds, but nothing good; closest is 3d env + render, then vid<>vid #3dmystore

import new 360 video dataset & realize 360 image textures are rendering inverted, so fix sphere/texture issue #mused

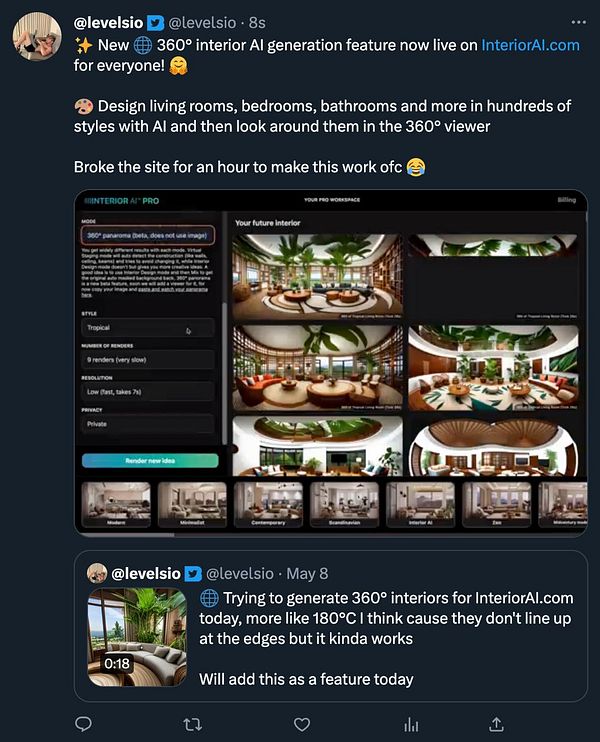

generate 360° interiors with  #interiorai

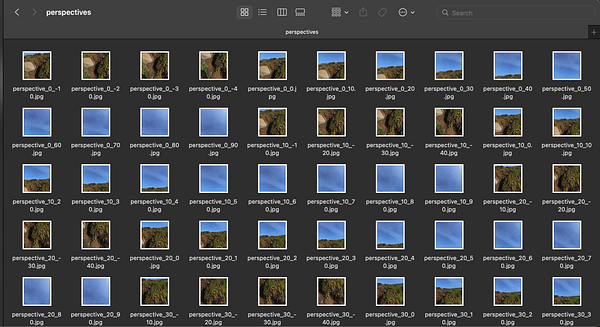

test new method eq 2 perspectives for processing 360 video frames  #mused

Try generating video #multiverse

Get each step of 360 video >> virtual tour working & spend sick/jetlagged days solving a ton of bugs in the interface: aligning 360 data to env, virtual tour ui, etc. #spaceshare

deliver 360 videos to a customer  #videoaime

beta homebrew virtual tour from 360 video input  #mused

for Google Street View-like transition fx between 360s, render cuberendertarget as envMap on the material of the 3d scan; it won't be displayed like this, only during the transition as the user moves between 360 image nodes #mused

fail w/ medium dataset from 360 video (~6,000 images), like the small one, but the full dataset requires too much memory; next strategy is to render each input separately #mused

export orthomosaics for researchers from 3d build from 360 video  #mused

deploy 360° interior generation  #interiorai

try to make a generative 3d world from video, just simple arrow key movement  #3dmystore

test conversions of 360 images to rectilinear for making splats, about 80° vertical & horizontal fov gives the best result so far #mused

Making some 3D scenes  #morflaxstudio

render first user-submitted video to neural radiance field virtual tour  #mused

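
For the 360-to-rectilinear conversion todo above, here is a rough sketch of the per-pixel mapping such a conversion typically relies on. It is not the pipeline used in these todos: the function name, the axis conventions (+x right, +y up, +z forward), and the `yaw_deg`/`pitch_deg` parameters are all assumptions for illustration. The 80° default only echoes the fov mentioned in the todo.

```python
import math


def rectilinear_to_equirect_uv(x, y, width, height,
                               fov_h_deg=80.0, fov_v_deg=80.0,
                               yaw_deg=0.0, pitch_deg=0.0):
    """Map a pixel of a virtual pinhole view to (u, v) in [0, 1] on an
    equirectangular image. Hypothetical helper; conventions are assumed."""
    # Focal lengths in pixels, derived from the requested field of view.
    fx = (width / 2) / math.tan(math.radians(fov_h_deg) / 2)
    fy = (height / 2) / math.tan(math.radians(fov_v_deg) / 2)

    # Ray through the pixel centre in camera space (+x right, +y up, +z forward).
    dx = (x + 0.5 - width / 2) / fx
    dy = -(y + 0.5 - height / 2) / fy
    dz = 1.0

    # Rotate the ray: pitch about the x-axis (positive looks up),
    # then yaw about the y-axis (positive turns right).
    p = math.radians(pitch_deg)
    w = math.radians(yaw_deg)
    dy, dz = dy * math.cos(p) + dz * math.sin(p), -dy * math.sin(p) + dz * math.cos(p)
    dx, dz = dx * math.cos(w) + dz * math.sin(w), -dx * math.sin(w) + dz * math.cos(w)

    # Direction -> longitude/latitude -> normalised equirectangular coords.
    lon = math.atan2(dx, dz)                  # in [-pi, pi]
    lat = math.atan2(dy, math.hypot(dx, dz))  # in [-pi/2, pi/2]
    u = lon / (2 * math.pi) + 0.5
    v = 0.5 - lat / math.pi
    return u, v
```

Sampling the 360 frame at `(u * pano_width, v * pano_height)` for every output pixel gives one rectilinear view; several yaw/pitch values cover the sphere. Wider fov values stretch the image edges more, which may be part of why a moderate fov works better for splat inputs.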