Similar todos

render out two videos from neural networks on tiny datasets to test new method of capture & pipeline  #mused

with larger datasets, test lowering the number of images to sample from, but results are too blurry to be usable :/ so go back to lowering the fps of the input data  #mused
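
Lowering the fps of the input data amounts to keeping every n-th frame. A minimal sketch, assuming frames are indexed in order and the source and target frame rates are known (`subsample_indices` is a hypothetical helper, not from the project):

```python
def subsample_indices(num_frames: int, src_fps: float, target_fps: float) -> list[int]:
    """Indices of the frames to keep when reducing src_fps to target_fps."""
    if src_fps <= 0 or target_fps <= 0:
        raise ValueError("fps values must be positive")
    if target_fps >= src_fps:
        return list(range(num_frames))  # nothing to drop
    step = src_fps / target_fps  # source frames per kept frame (may be fractional)
    kept = []
    i = 0
    while True:
        idx = int(i * step)  # floor of the i-th sample position
        if idx >= num_frames:
            break
        kept.append(idx)
        i += 1
    return kept


print(subsample_indices(60, 30, 2))  # [0, 15, 30, 45]
```

A fractional step (e.g. 30 fps down to 12 fps) still spaces the kept frames evenly over time instead of rounding to a fixed stride.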

test & fail 3d environment reconstruction from only 50 images instead of video  #mused

test pipeline choosing least blurry image out of every 120 frames for smaller datasets  #mused
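
A minimal sketch of picking the least blurry frame from each 120-frame block. The log doesn't say which blur metric was used; this assumes the common variance-of-Laplacian heuristic on grayscale frames, and `laplacian_variance` / `pick_sharpest` are hypothetical names:

```python
from typing import Sequence

Frame = Sequence[Sequence[float]]  # grayscale frame as rows of pixel intensities


def laplacian_variance(frame: Frame) -> float:
    """Variance of the 4-neighbour Laplacian response; higher means more edges, i.e. sharper."""
    h, w = len(frame), len(frame[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (frame[y - 1][x] + frame[y + 1][x] + frame[y][x - 1]
                   + frame[y][x + 1] - 4 * frame[y][x])
            responses.append(lap)
    if not responses:
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def pick_sharpest(frames: Sequence[Frame], window: int = 120) -> list[int]:
    """For each consecutive block of `window` frames, return the index of the sharpest one."""
    picks = []
    for start in range(0, len(frames), window):
        block = range(start, min(start + window, len(frames)))
        picks.append(max(block, key=lambda i: laplacian_variance(frames[i])))
    return picks
```

On real footage the same score is usually computed with OpenCV as `cv2.Laplacian(gray, cv2.CV_64F).var()`; the pure-Python loop here only keeps the sketch dependency-free.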

render from acropolis small model & start processing data for full  #mused

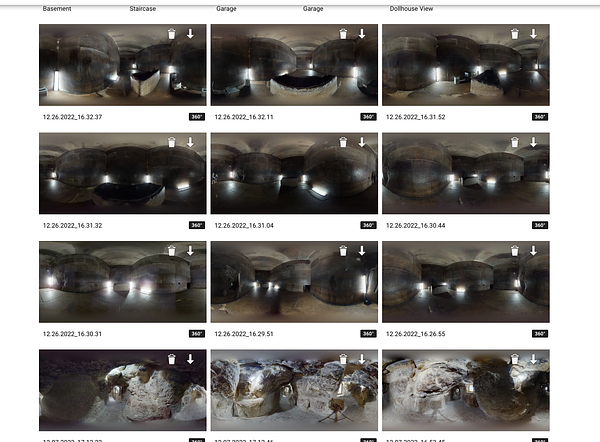

try generating 360 video scenes (using vid<>vid from existing 360 video rendered from Unity), not great results  #3dmystore

training on the full dataset is taking too long, so export just the King's Chamber 360s for testing  #mused

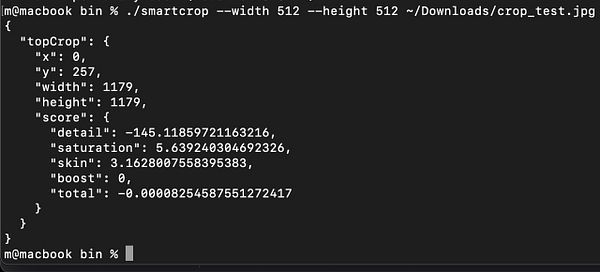

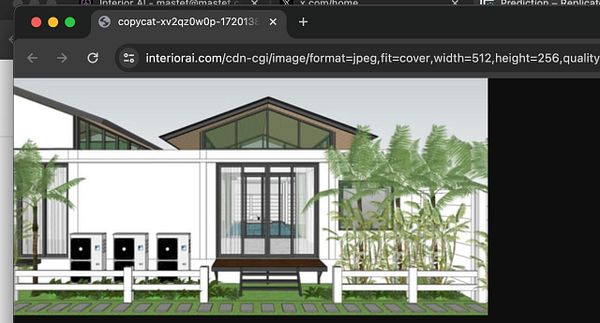

fix memory bug by scaling input image smaller  #interiorai
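
Scaling the input down helps because memory for image tensors grows with pixel count: halving each side cuts it to roughly a quarter. A sketch under assumed numbers (the real resolution, limit, and dtype for #interiorai aren't in the log; `max_side=1024`, float32 RGB, and both helper names are hypothetical):

```python
def fit_within(width: int, height: int, max_side: int) -> tuple[int, int]:
    """Shrink (width, height) so the longer side is at most max_side, keeping aspect ratio."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # already small enough; never upscale
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


def rgb_float32_bytes(width: int, height: int) -> int:
    """Bytes for one RGB image stored as float32 (3 channels x 4 bytes per value)."""
    return width * height * 3 * 4


orig = (4000, 3000)  # hypothetical input size
small = fit_within(*orig, max_side=1024)
print(small, rgb_float32_bytes(*orig), rgb_float32_bytes(*small))  # (1024, 768) 144000000 9437184
```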

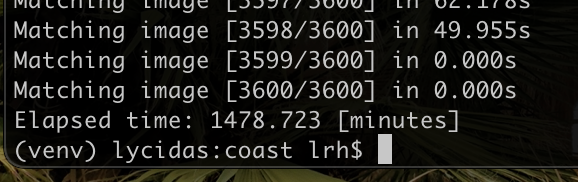

finish processing and aligning data for 360 images for the Big Sur NeRF, total about 28 hours (24 of them on the last step)  #mused

Experiment with rendering performance  #morflaxstudio

import new 360 video dataset & realize the 360 image textures are rendering inverted, so fix the sphere/texture issue  #mused
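
The log doesn't say what the fix was. One common cause, assumed here, is that an equirectangular texture mapped onto a sphere and viewed from the inside appears left-right mirrored; mirroring the horizontal texture coordinate (u to 1 - u), or equivalently reversing each pixel row, undoes it. Both helpers below are hypothetical:

```python
def flip_u(u: float, v: float) -> tuple[float, float]:
    """Mirror the horizontal (longitude) texture coordinate; v (latitude) is unchanged."""
    return 1.0 - u, v


def mirror_rows(image: list[list[int]]) -> list[list[int]]:
    """Pixel-space equivalent of flip_u: reverse every row of the equirectangular image."""
    return [row[::-1] for row in image]
```

In an engine this is usually done in the material or shader (for example a negative horizontal texture scale) rather than by rewriting pixels.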

Experimenting with faster process to create videos  #theportal

new method for tighter control of transitions between 360 images for walking around  #mused

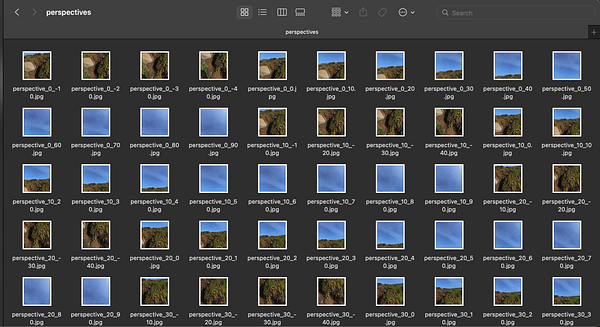

test new method eq 2 perspectives for processing 360 video frames  #mused
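
Assuming "eq 2 perspectives" means converting equirectangular 360 frames into ordinary pinhole (perspective) views, which many NeRF and photogrammetry tools expect, the core step maps each output pixel to a longitude/latitude and then to equirectangular pixel coordinates. A sketch with assumed conventions (x right, y down, z forward; positive yaw turns right, positive pitch looks up; `fov_deg` is the horizontal field of view; the function name is hypothetical):

```python
import math


def perspective_to_equirect(px: int, py: int, out_w: int, out_h: int, fov_deg: float,
                            yaw_deg: float, pitch_deg: float,
                            eq_w: int, eq_h: int) -> tuple[float, float]:
    """Map pixel (px, py) of a pinhole view to (u, v) pixel coords in an equirectangular image."""
    # Focal length in pixels from the horizontal field of view.
    f = (out_w / 2) / math.tan(math.radians(fov_deg) / 2)
    # Camera-space ray through the pixel centre: x right, y down, z forward.
    x = px + 0.5 - out_w / 2
    y = py + 0.5 - out_h / 2
    z = f
    # Pitch: rotate about the x axis (positive pitch tilts the view up, towards -y).
    p = math.radians(pitch_deg)
    y, z = y * math.cos(p) - z * math.sin(p), y * math.sin(p) + z * math.cos(p)
    # Yaw: rotate about the vertical axis (positive yaw turns the view right, towards +x).
    a = math.radians(yaw_deg)
    x, z = x * math.cos(a) + z * math.sin(a), -x * math.sin(a) + z * math.cos(a)
    # Ray direction -> longitude (0 = straight ahead) and latitude (positive = up).
    lon = math.atan2(x, z)
    lat = math.atan2(-y, math.hypot(x, z))
    # Longitude/latitude -> equirectangular pixel coords (image centre = straight ahead).
    u = (lon / (2 * math.pi) + 0.5) * eq_w
    v = (0.5 - lat / math.pi) * eq_h
    return u, v
```

To render a view, loop over every (px, py), wrap u modulo the equirect width, clamp v to the height, and sample (nearest or bilinear). Several yaws per frame, for example four views at 90° horizontal FOV, cover the horizon band.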