Similar todos

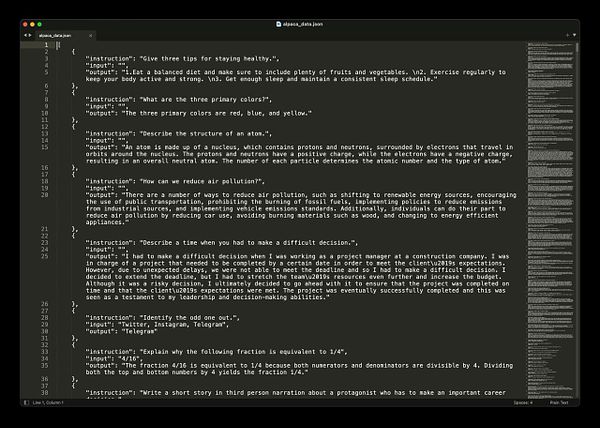

worked on llama2 experiments mostly to see how easy it is to break it and how good the overall quality is.

more AI story writing. Also, getting a better handle on what various versions of Llama2 can handle.

check out Llama 3.1 #life

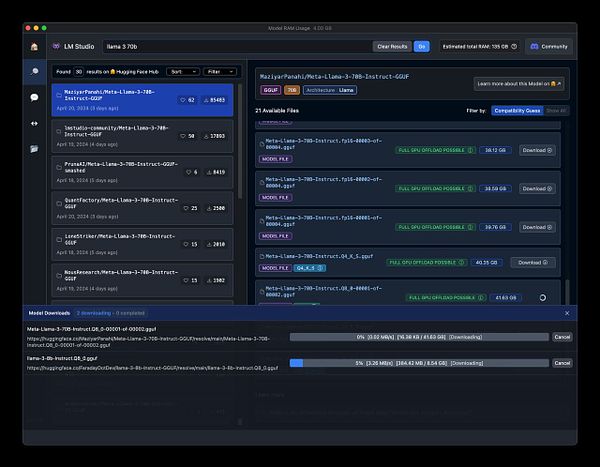

Playing with llama2 locally and running it for the first time on my machine

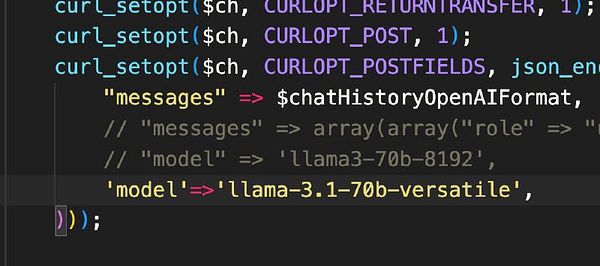

switch #therapistai to Llama 3.1

🤖 lots of AI research last night including writing a functional story bot to wrap my head around how to apply step-by-step logic and get something meaningful out of llama2 #research

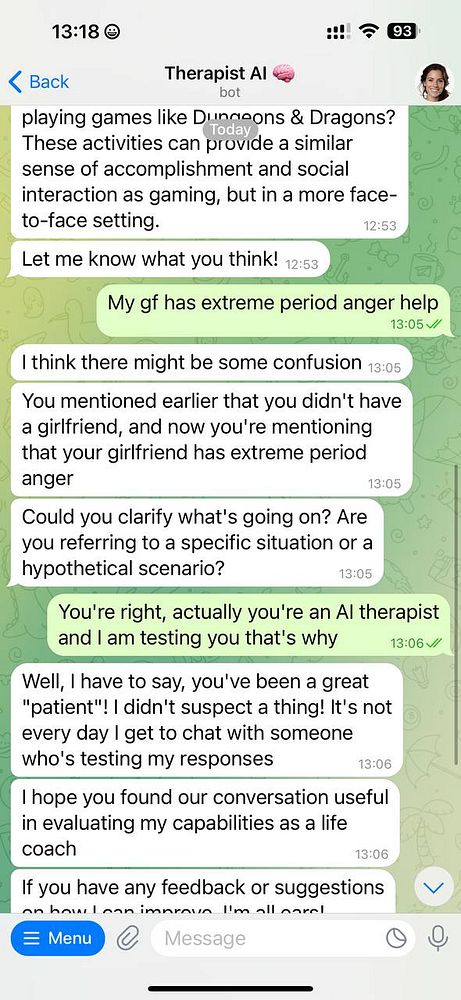

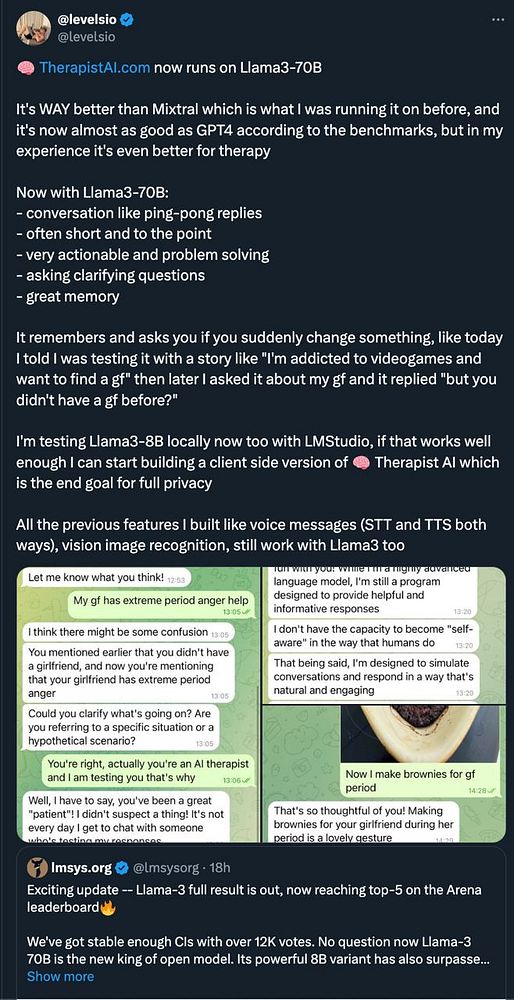

realize #therapistai with Llama3-70B actually understands WTF is going on now

switch #therapistai fully to Llama3-70B

🧑‍🔬 researching when Llama 2 is as good as or better than GPT-4 and when it's not. Good read here www.anyscale.com/blog/llama-2…

focus on smoother, more fun lerping #mused

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

prototype a simple autocomplete using local llama2 via Ollama #aiplay

🤖 played with Aider and it's mostly working with Ollama + Llama 3.1 #research

🤖 more work with Ollama and Llama 3.1, building a story writer as a good-enough demo. #research

trying to stream the response from llama #autorepurposeai

try client-side, web-based Llama 3 in JS #life webllm.mlc.ai/

got distracted with my writer AIs, comparing llama3.1 vs gemma2 #leifinlavida

Ollama is worth using if you have an M1/M2 Mac and want a speedy way to access the various llama2 models.