Similar todos

Chunk dataset big files and import to DB (Data Science project)  #zg

did more Meilisearch hacking and I can't figure out why ~280M of data going in turns into a 6G index, which is way too much space for the contents. Search and facets work really well though, so maybe it's worthwhile?

🔢 found a few datasets which fill in a ton of gaps  #conf

store crawler batch info data #dvlpht

figure out why dataset was weird  #reactd32018

explore streaming data in chunks  #imessage

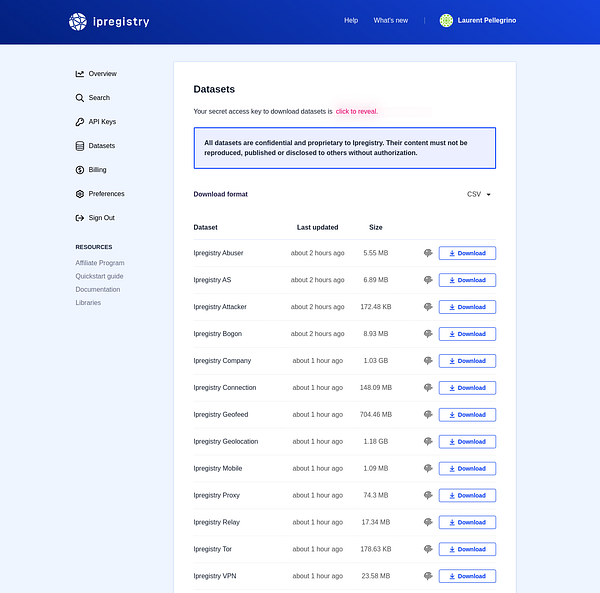

Refine page to download datasets  #ipregistry

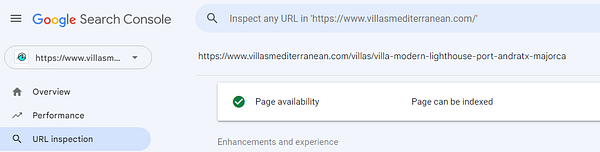

Request indexing on GSC  #villasmediterranean

upload harvard dataset

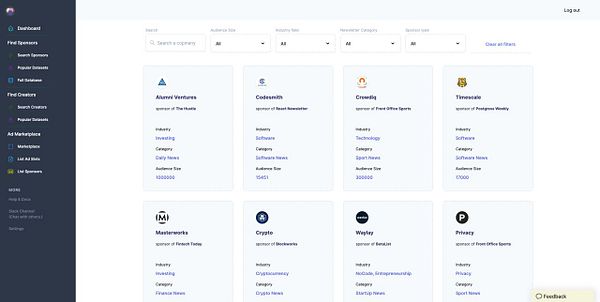

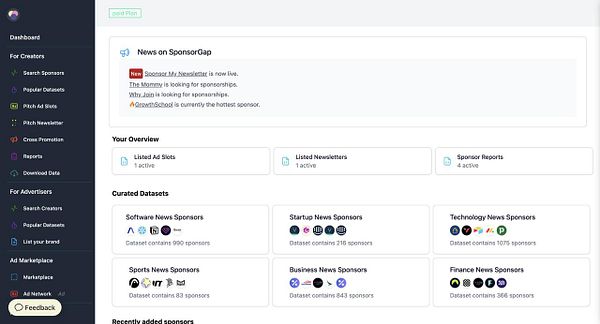

deployed search, filter and pagination for  #sponsorgap database

Reach 65,000,000 database rows 🔥  #sparkbase

increased download volume of datasets  #sponsorgap as asked by customer

build a demo search within  #skillmap using a generic dataset for indices

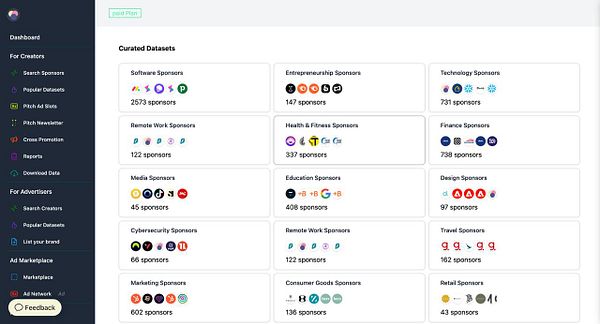

added more curated datasets to  #sponsorgap

added curated datasets to  #sponsorgap

got an actually working dataset for CLV  #ml4all

Extracted more than 35 million domain names out of crawled dataset 💪 Now I need to check if it matches the complete internet 😅  #alldomains
