Similar todos

check out Llama 3.1  #life

wrote a guide on llama 3.2  #getdeploying

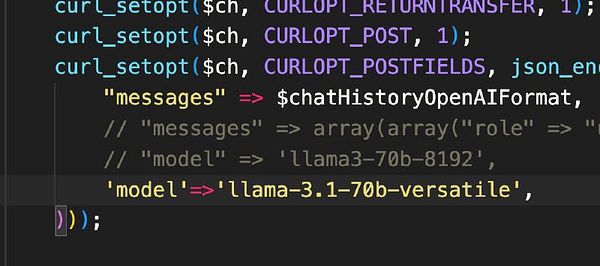

switch  #therapistai to Llama 3.1

read llama guard paper  #aiplay

🤖 Tried out Llama 3.3 and the latest Ollama client for what feels like flawless local tool calling.  #research

✏️ wrote about running Llama 3.1 locally through Ollama on my Mac Studio. micro.webology.dev/2024/07/24…

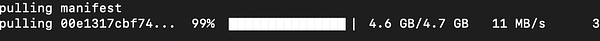

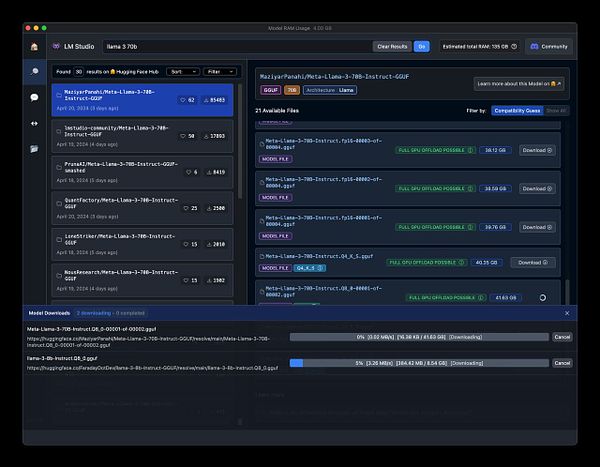

Playing with llama2 locally and running it for the first time on my machine

more fun with llama2 and figuring out how to better control/predict stable output

got llamacode working locally and it's really good

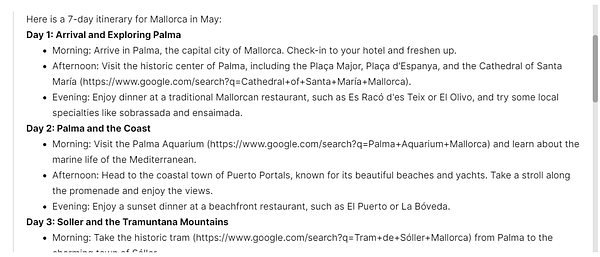

try client-side, web-based Llama 3 in JS #life webllm.mlc.ai/

got llama3 on groq working with cursor 🤯

worked on llama2 experiments mostly to see how easy it is to break it and how good the overall quality is.

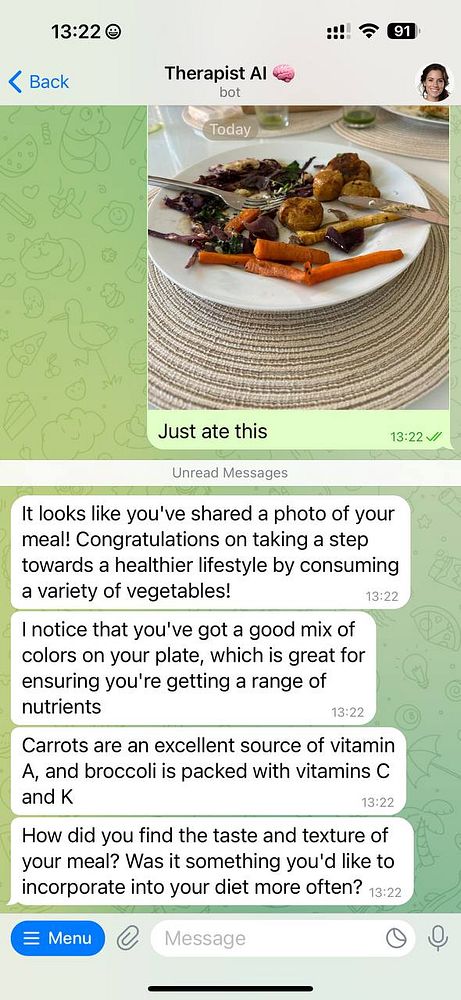

test Vision model coop with Llama3  #therapistai

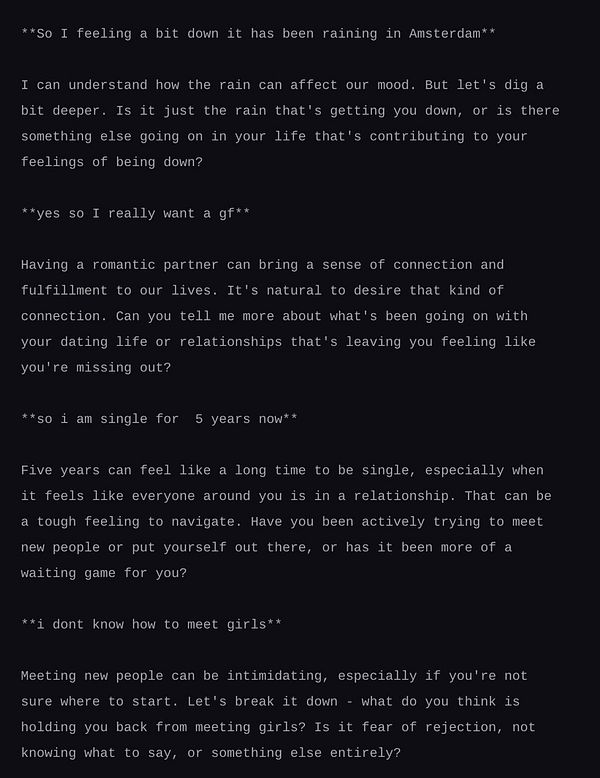

test  #therapistai with Llama 3-70B

prototype a simple autocomplete using local llama2 via Ollama  #aiplay
