Back

Similar todos

Load previous page…

read up on llm embedding to start building something new with ollama

🤖 more working with Ollama and Llama 3.1 and working on a story writer as a good enough demo.  #research

#research

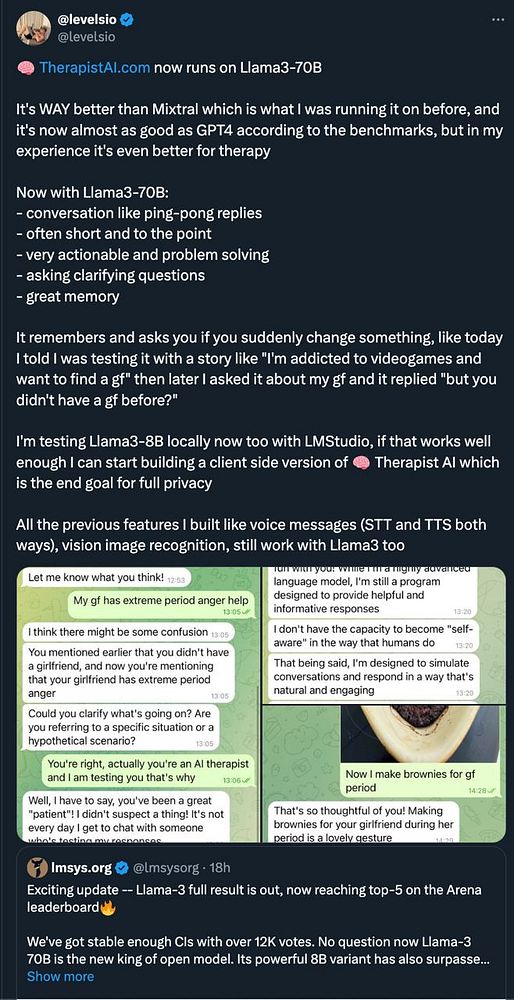

switch  #therapistai fully to Llama3-70B

#therapistai fully to Llama3-70B

🤖 got llama-cpp running locally 🐍

🤖 Updated some scripts to use Ollama's latest structured output with Llama 3.3 (latest) and fell back to Llama 3.2. I drop from >1 minute with 3.3 down to 2 to 7 seconds per request with 3.2. I can't see a difference in the results. For small projects 3.2 is the better path.  #research

#research

Over the past three days and a half, I've dedicated all my time to developing a new product that leverages locally running Llama 3.1 for real-time AI responses.

It's now available on Macs with M series chips – completely free, local, and incredibly fast.

Get it: Snapbox.app  #snapbox

#snapbox

tested llama model to scan newsletters  #sponsorgap

#sponsorgap

🤖 played with Aider and it mostly working with Ollama + Llama 3.1  #research

#research

start new project #ll

installed cody to cursor, so that i can use llama3.1 and gemma2 via ollama  #astronote

#astronote  #leifinlavida

#leifinlavida

🤖 lots of AI research last night including writing a functional story bot to wrap my head around how to apply step-by-step logic and get something meaningful out of llama2  #research

#research

PR to LlamaIndex merged. OpenAPI Spec loader :)

install llama2 and come up with a rough draft of a story writing tool with Python. This is deceptively easier than it should be.

🤖 played with Ollama's tool calling with Llama 3.2 to create a calendar management agent demo  #research

#research

use llama3 70b to create transcript summary  #spectropic

#spectropic

more llm deep diving with Falcon 👀

More Ollama experimenting  #research

#research

new article #llfr