Similar todos

work on setting up the system locally #labs

removed all Ollama models except one #leifinlavida

🤖 tried to set up Tailscale Serve to access Ollama, and it's not happy

📝 prototyped an llm-ollama plugin tonight. It lists the models and talks to the right places; prompts need more work.

🤖 got llama-cpp running locally 🐍

🤖 Updated some scripts to use Ollama's latest structured output with Llama 3.3 (latest), then fell back to Llama 3.2. Per-request time dropped from over 1 minute with 3.3 to 2 to 7 seconds with 3.2, and I can't see a difference in the results. For small projects, 3.2 is the better path. #research

start new project #ll

set up local SSL environment #pocketpager

set up local #chocolab dev environment

🤖 played with Ollama's tool calling with Llama 3.2 to create a calendar management agent demo #research

homework 5 of the LLMOps bootcamp #llms

set up local dev env #sanderfish

set up local dev env #chime

install Laravel project and set it up

set up project locally #entryleveljobs

local project setup #keyframes

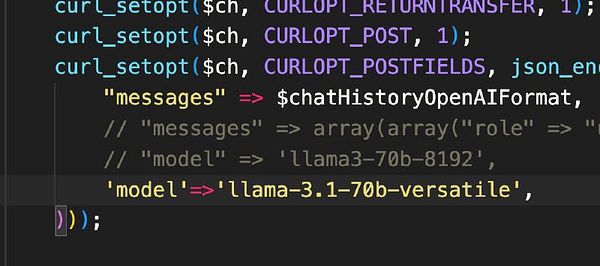

switch #therapistai to Llama 3.1

Got whatdo and lets-play running locally

reading a paper about LLM deployments #aiplay