Similar todos

worked on llama2 experiments mostly to see how easy it is to break it and how good the overall quality is.

Over the past three and a half days, I've dedicated all my time to developing a new product that leverages a locally running Llama 3.1 for real-time AI responses.

It's now available on Macs with M-series chips: completely free, local, and incredibly fast.

Get it: Snapbox.app  #snapbox

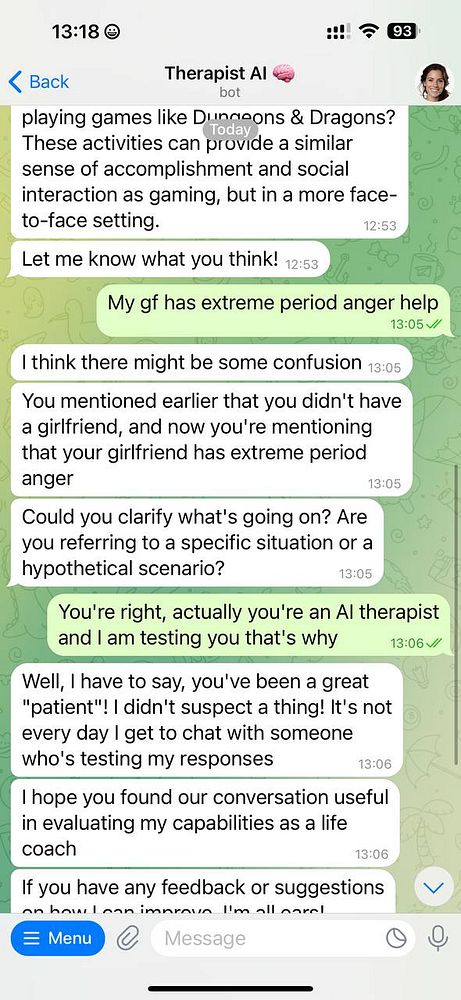

realize  #therapistai with Llama3-70B actually understands WTF is going on now

🦙 test flight Llama Life iPhone app, send review

installed cody to cursor, so that i can use llama3.1 and gemma2 via ollama  #astronote

#astronote  #leifinlavida

prototype a simple autocomplete using local llama2 via Ollama  #aiplay

use llama3 70b to create transcript summary  #spectropic

read llama guard paper  #aiplay

got llamacode working locally and it's really good

FINALLY! Made the canvas work. First time using a combo of Llama 3.1, Claude Sonnet 3.5 and ChatGPT, but the trickiest parts were mostly solved by Llama 3.1. Looks like Claude is better at conventional coding than at less common stuff like canvas, and I'm pleasantly surprised that Llama 3.1 can deliver on it! Now, what should I call this new project... #indiejourney

🤖 played with Ollama's tool calling with Llama 3.2 to create a calendar management agent demo  #research

🦙 install Llama Life iOS app. leave 5-star rating and a review

🤖 got llama-cpp running locally 🐍