Similar todos

Run openllm dollyv2 on local linux server

installed cody to cursor, so that i can use llama3.1 and gemma2 via ollama #astronote #leifinlavida

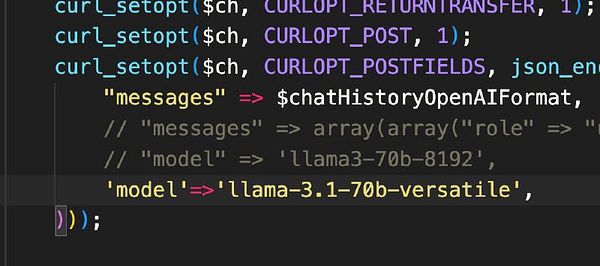

switch #therapistai to Llama 3.1

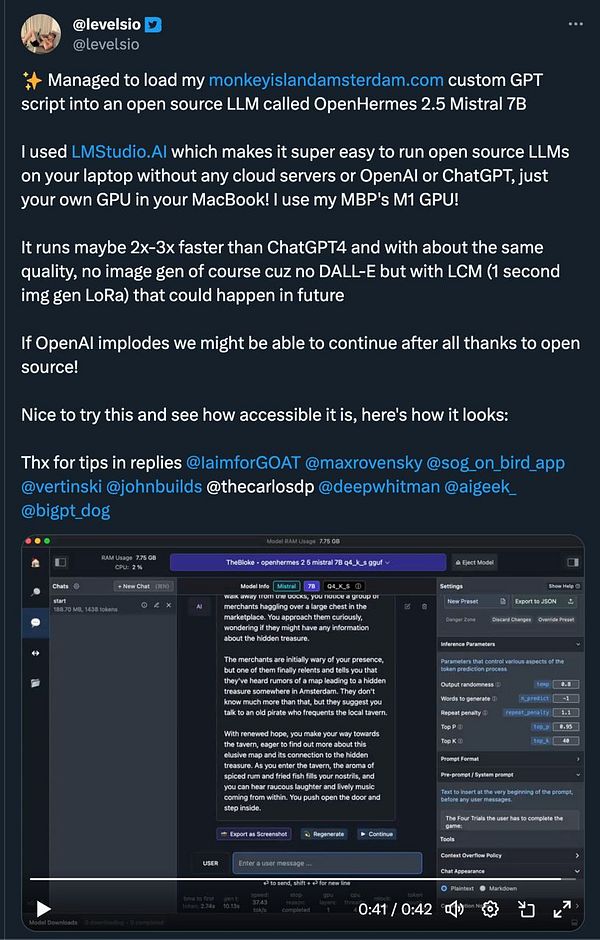

try loading #monkeyisland in my own local LLM

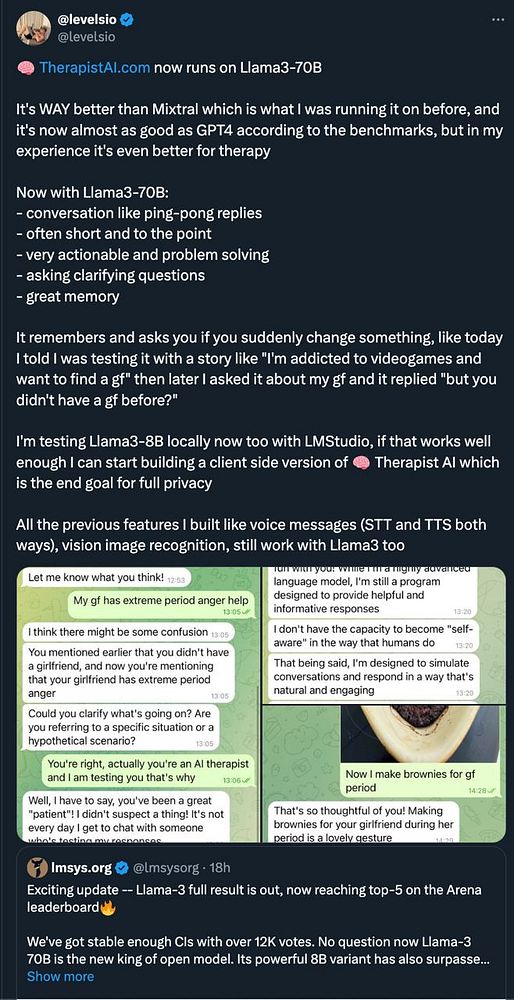

test #therapistai with Llama 3-70B

implemented ollama local models #rabbitholes

check out Llama 3.1 #life

plugin comparison #ekh

test OpenHermes 2.5 Mistral LLM

🤖 Tried out Llama 3.3 and the latest Ollama client for what feels like flawless local tool calling. #research

switch #therapistai fully to Llama3-70B

Ran some local LLM tests 🤖

published a test package

testing hookmonitor #integratewp

🤖 spent some time getting Ollama and langchain to work together. I hooked up tooling/function calling and noticed that I was only getting a match on the first function call. Kind of neat but kind of a pain.

read llama guard paper #aiplay

add pre-commit git hook testing lambda code