Similar todos

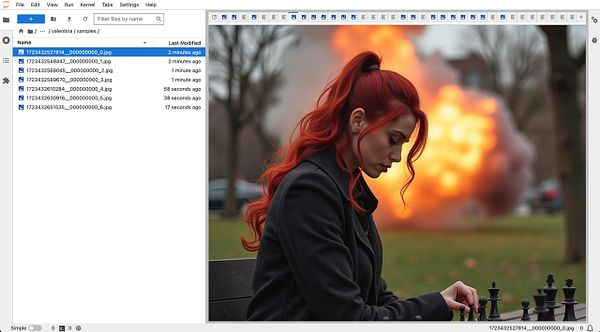

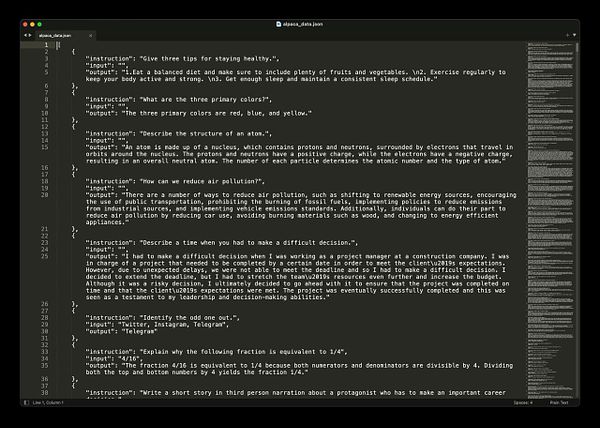

got an actually working dataset for clv #ml4all

provision a batch of L4 and L40S GPUs at Scaleway for #mirage, since our account got validated and quotas lifted

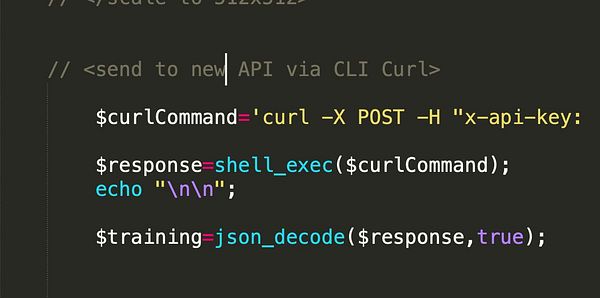

Work on #nichewit, pull in production datasets to iterate a bit faster locally

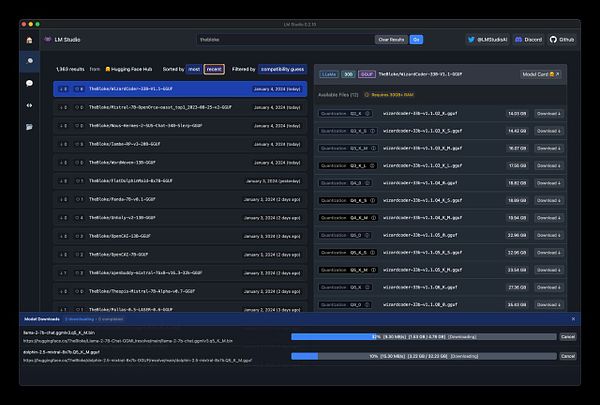

finish migrating #mirage Kubernetes Intel and NVIDIA GPU instances to Scaleway, getting latest-generation NVIDIA L40S + L4 GPUs, running much smoother now! (previously: old A40 and A16)

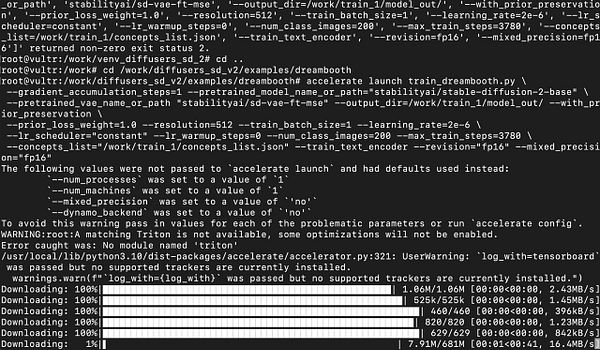

set up a more powerful VM for processing data & training models #mused

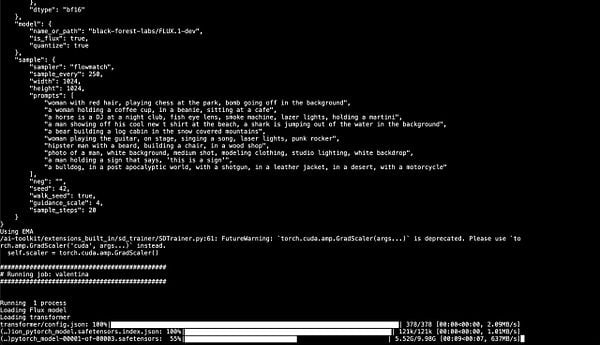

Updating Automatic 11, installing a new video GPU, today it's a day to learn how to train local models :B #dailywork

Chunk the dataset's big files and import them to the DB (Data Science project) #zg

Prepare datasets for demo