Similar todos

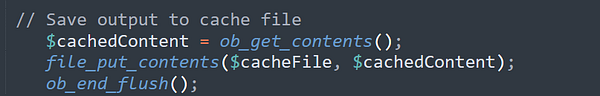

add model caching  #ctr

double the cache time for recs

try and cache some common but somewhat slow calculations  #blip

Set up Memcached / fragment caching for  #postcard to get that last bit of speed (and scalability!)

Set up caching for CI to get a small speedup  #newsletty

pricing analysis and improvements for storing cache on backend  #docgptai

use cache for algolia results cuz getting expensive  #japandev

basic cache for urql  #there

cache estimate lookup  #postman

Cache results to improve search functionality #greenjobshunt

finish saving of training data  #misc

Create DB dataframes for faster inference

cache images locally

converted all the heavy data points to use cache  #coinlistr