Similar todos

try client-side, web-based Llama 3 in JS #life webllm.mlc.ai/

got llama3 on groq working with cursor 🤯

got distracted with my writer AIs — testing between llama3.1 vs gemma2  #leifinlavida

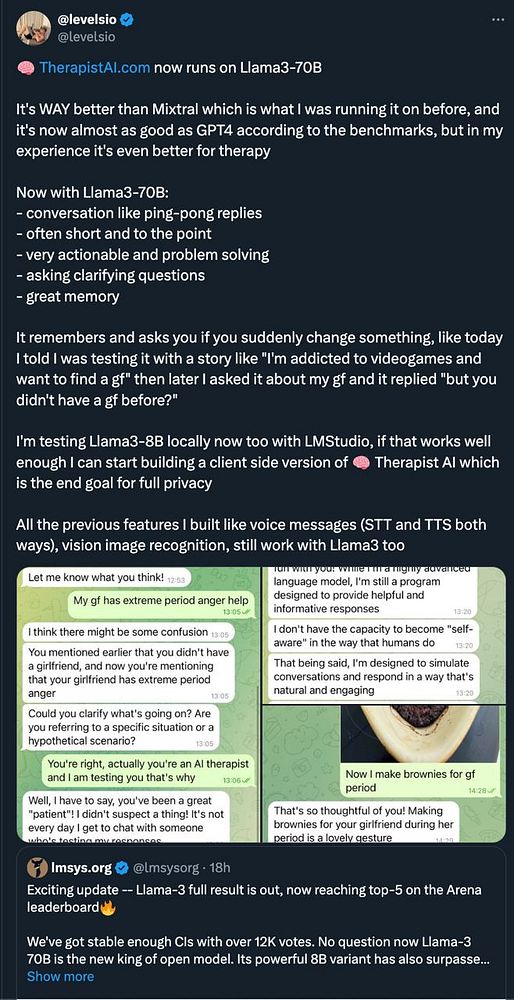

switch  #therapistai fully to Llama3-70B

🤖 more working with Ollama and Llama 3.1 and working on a story writer as a good enough demo.  #research

Playing with llama2 locally and running it for the first time on my machine

I used Claude 3.5 Project + Artifacts to help refactor most of the Ollama + Llama 3.1  #research project. I would call it a writing bot, but I'm not building it to automate writing. It's mostly a content wrapper around the Chat interface but it's good at generating code and building off of chat history.

use llama3 70b to create transcript summary  #spectropic
