
Jason Wu


Switched the editorial pipeline to use o1. With the same data collected, the result is much better structured and highlights more of the important stuff. #contentcreation

www.blockrundown.com/article/…


Published a new episode of "Next In AI", about the foundational Google paper revealing the secret of o1

open.spotify.com/episode/3Lcu…

[Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters] #nextinai

Published the AI-generated paper-reading podcast "Next In AI". This episode is about "Let's Verify Step by Step", the paper underlying OpenAI's o1 model:

open.spotify.com/episode/0mmG…

Is this helpful? #nextinai

Read [Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters](arxiv.org/pdf/2408.03314)

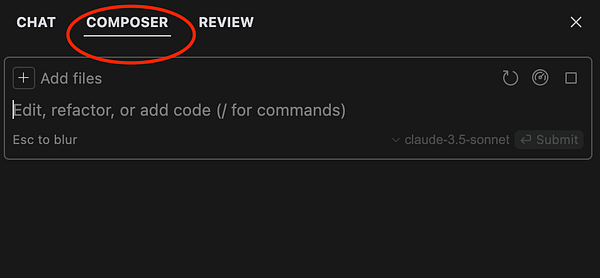

My impression of o1 so far: it requires more "thinking" from the human as well. To get the best result, I find myself writing a well-thought-through "letter" to the model, versus rapid-fire "SMS" messages to a simpler model without the reasoning capabilities. And yes, I create that "letter" with a simpler model during the initial "exploration" phase.
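A rough sketch of that two-stage workflow, for anyone who wants to try it. Everything here is an assumption for illustration: `Model`, `draft_letter`, and `two_stage` are hypothetical names, and each model is just a function from prompt text to reply text, so you can plug in whatever client you actually use.

```python
from typing import Callable, List

# Hypothetical type: any function mapping a prompt string to a reply string.
# Wrap your real model client in a function with this shape.
Model = Callable[[str], str]


def draft_letter(simple_model: Model, notes: List[str]) -> str:
    """Exploration phase: have the simpler model turn rough notes into one brief."""
    prompt = (
        "Turn these rough notes into one detailed, self-contained brief "
        "(goal, context, constraints, desired output):\n"
        + "\n".join(f"- {note}" for note in notes)
    )
    return simple_model(prompt)


def two_stage(simple_model: Model, reasoning_model: Model, notes: List[str]) -> str:
    """Draft the "letter" with the simpler model, then send it once to the reasoning model."""
    letter = draft_letter(simple_model, notes)
    return reasoning_model(letter)
```

The point of the split is that the quick back-and-forth happens with the cheaper model, and the reasoning model only sees the finished "letter".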